Privacy

Gretel and Google Cloud partner on synthetic data for safer generative AI adoption

Gretel partners with Google Cloud to harness the power of synthetic data and accelerate safer generative AI adoption in the enterprise.

Read more...

How to Generate Synthetic Data: Tools and Techniques to Create Interchangeable Datasets

Synthetic data is algorithmically generated data that mirrors the statistical properties of the dataset it’s based on. Learn how to make high-quality synthetic data.

Read more...

Gretel Unlocks PII Detection with Synthetic Financial Document Dataset

Gretel releases a new synthetic financial document dataset to empower AI developers in building customized and highly performant sensitive data detection systems.

Read more...

Synthesizing Private Patient Data with Gretel: A Step-by-Step Guide

Create privacy-safe synthetic patient data with Gretel, ensuring compliance, secure sharing, and actionable insights for AI and machine learning in healthcare.

Read more...

Quantifying PII Exposure in Synthetic Data

How to measure and minimize personally identifiable information (PII) risk in synthetic data.

Read more...

Generate Differentially Private Synthetic Text with Gretel GPT

Safely leverage sensitive or proprietary text data for advanced language model training and fine-tuning

Read more...

Introducing Gretel Tabular DP: A fast, graph-based synthetic data model with strong differential privacy guarantees

Gretel Tabular DP is a fast and powerful new model for generating high-quality tabular synthetic data with mathematical guarantees of privacy.

Read more...

How to Create Synthetic Data at High Quality for Fine-Tuning LLMs

Gretel Navigator’s synthetic data generation outperformed OpenAI's GPT-4 by 25.6%, surpassed Llama3-70b by 48.1%, and exceeded human expert-curated data by 73.6%.

Read more...

Privacy-preserving AI development with Azure & Gretel

Leveraging Gretel's privacy-preserving synthetic data generation platform to fine-tune Azure OpenAI Service models in the financial domain.

Read more...

Red Teaming Synthetic Data Models

How we implemented a practical attack on a synthetic data model to validate its ability to protect sensitive information under different parameter settings.

Read more...

Fine-tuning Models for Healthcare via Differentially-Private Synthetic Text

How to safely fine-tune LLMs on sensitive medical text for healthcare AI applications using Gretel and Amazon Bedrock

Read more...

Test Data Generation: Uses, Benefits, and Tips

Test data generation is the process of creating new data that replicates an original dataset. Here’s how developers and data engineers use it.

Read more...

Teaching large language models to zip their lips with RLPF

Gretel introduces Reinforcement Learning from Privacy Feedback (RLPF), a novel approach to reduce the likelihood of a language model leaking private information.

Read more...

Gretel’s New Data Privacy Score

Gretel releases an industry-standard privacy evaluation and risk-based scoring system for synthetic tabular data.

Read more...

AWS + Gretel Synthetic Data Accelerator Program for Generative AI

How our new Synthetic Data Accelerator Program with AWS will help enterprises scale responsible AI systems fast.

Read more...

Differential Privacy and Synthetic Text Generation with Gretel: Making Data Available at Scale (Part 1)

How differential privacy can generate provably private synthetic text data for a variety of enterprise AI applications.

Read more...

We just streamlined Gretel’s Python SDK

Discover the streamlined Gretel Python SDK. Start building with synthetic data in just 3 lines of code 🚀

Read more...

Predicting Patient Stay Durations in the ER with Safe Synthetic Data

Here's how a hospital uses Gretel to help forecast staffing and resource needs for their emergency care unit, and to identify emerging trends in outbreaks.

Read more...

Gretel is live on Google Cloud Marketplace 🎉

Gretel’s suite of privacy-enhancing tools and generative AI models is now available on Google Cloud Marketplace.

Read more...

Augmenting ML Datasets with Gretel and Vertex AI

How to utilize Gretel to create high-quality synthetic tabular data that you can use as training data for a classification model in Vertex AI.

Read more...

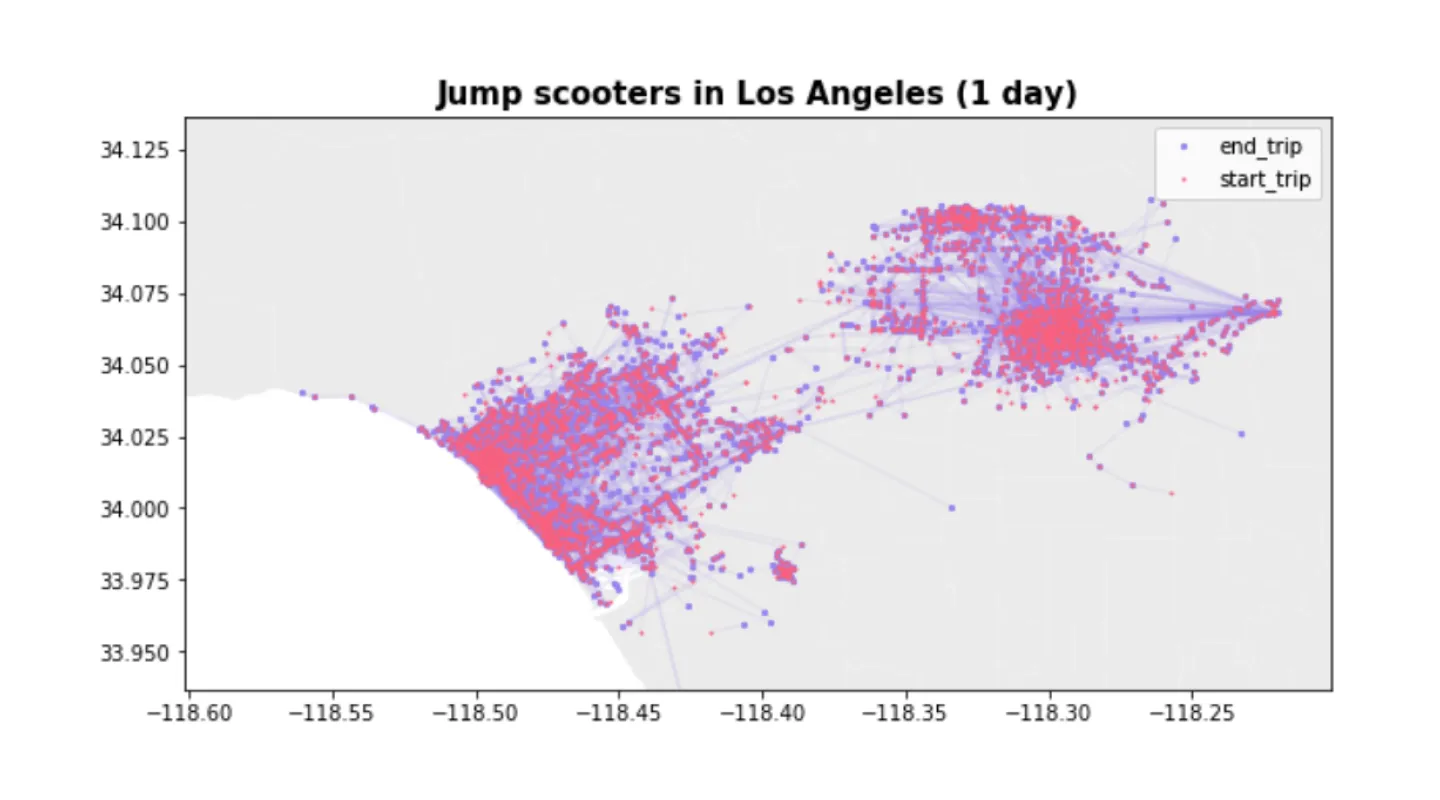

Using generative, differentially-private models to build privacy-enhancing, synthetic datasets from real data.

We’re going to train and build our synthetic dataset from a real-time public feed of e-bike ride-share data called GBFS (General Bikeshare Feed Specification).

Read more...

Practical Privacy with Synthetic Data

Implementing a practical attack to measure unintended memorization in synthetic data models.

Read more...

Common misconceptions about differential privacy

This article clarifies some common misconceptions about differential privacy and what it guarantees.

Read more...

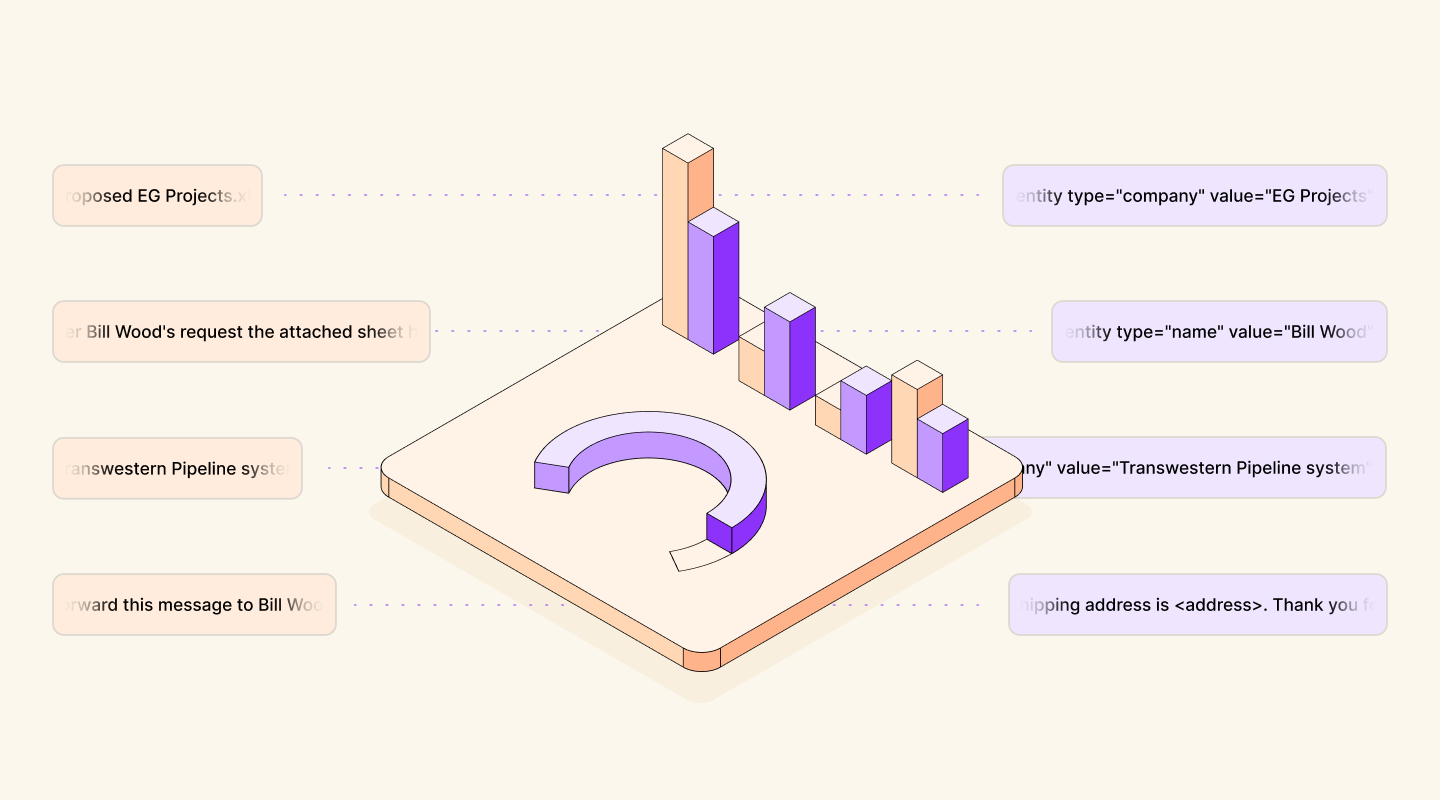

Got text? Use Named Entity Recognition (NER) to label PII in your data

Use Gretel’s NLP setting to label PII, including people's names and geographic locations, in free text.

Read more...

Create artificial data with Gretel Synthetics and Google Colaboratory

Use Gretel Synthetics and Colaboratory’s free GPUs to train a model to automatically generate fake, anonymized data with differential privacy guarantees.

Read more...

Automate Detecting Sensitive Personally Identifiable Information (PII)

Use Gretel.ai's APIs to continuously detect and protect sensitive data including credit cards, credentials, names, and addresses.

Read more...

Recognizing Data Privacy Day by Protecting Your Privacy

What if we could ensure that personal data was protected, benefiting not just the individual but also giving developers faster, worry-free access to data?

Read more...

Create high quality synthetic data in your cloud with Gretel.ai and Python

Create differentially private, synthetic versions of datasets and meet compliance requirements to keep sensitive data within your approved environment.

Read more...

Automated Data Exposure Detection with Gretel Outpost

Gretel Outpost is a free integration architecture that automates the steps a security team would take to assess data risk or exposure.

Read more...

Veterans Day Reflections: Open source software and evacuation operations, a remarkable combination.

Quickly and safely aggregate geolocation data for location density analysis using a hexagonal grid system.

Read more...

Fast data cataloging of streaming data for fun and privacy

Learn more about how Gretel's REST APIs automatically build a metastore that makes it easy to understand what is inside of your data.

Read more...

What is Privacy Engineering?

In this post, we will dive into what privacy engineering is, why it’s important, and some of the core use cases we are seeing that are enabled by privacy.

Read more...

Introducing Gretel's Privacy Filters

Create synthetic data that’s safer than ever. Our simple configuration file settings enable you to secure both your data and model from adversarial attacks.

Read more...

Gretel Synthetics: Introducing v0.10.0

Explore how to create a batch interface with the latest version of Gretel Synthetics on Google Colaboratory.

Read more...

What We’re Reading: Trends & Takeaways from the NeurIPS 2021 Conference

The Gretel research team's favorite trends and takeaways from NeurIPS, the 35th Annual Conference on Neural Information Processing Systems.

Read more...

What's new in Beta2

Beta2 for Gretel.ai is all about delivering privacy engineering as a service through clean, simple APIs.

Read more...

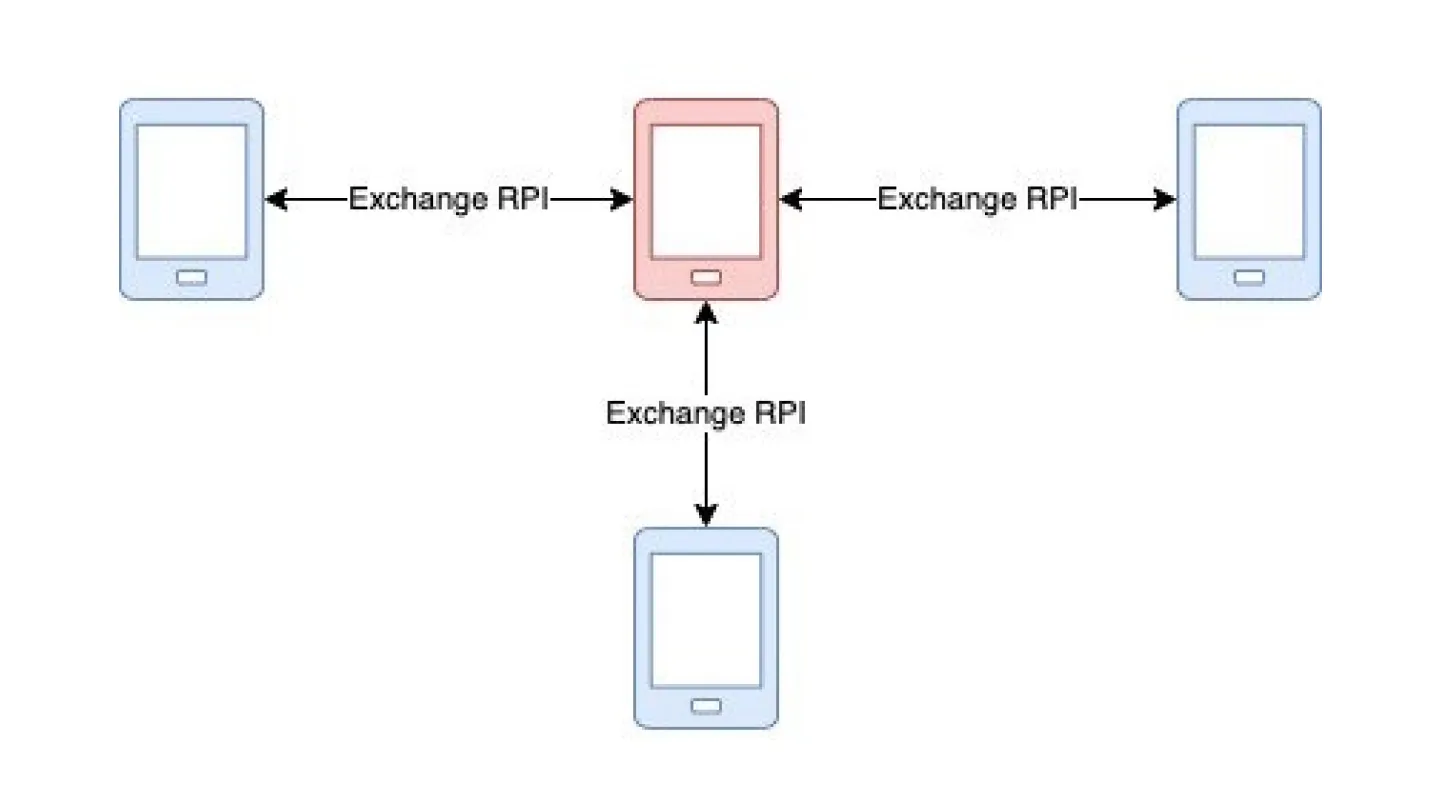

Contact Tracing: Deep Dive & Simulation

We decided to examine the privacy preserving capabilities of the Contact Tracing proposal, how it would be implemented, and what privacy concerns exist.

Read more...

Deep dive on generating synthetic data for Healthcare

Take a deep dive into training Gretel’s open-source synthetic data library to generate electronic health records that protect individual privacy and PII.

Read more...

Advanced Data Privacy: Gretel Privacy Filters and ML Accuracy

A look at how using Gretel’s Privacy Filters to immunize synthetic datasets against adversarial attacks can impact machine learning accuracy.

Read more...