Red Teaming Synthetic Data Models

May 8, 2023 update: The DP Model referenced in this blog post is Gretel LSTM with differential privacy (DP). Since this post, we've released Gretel Tabular DP, a fast and powerful model with differential privacy built-in, and have deprecated the DP support in Gretel LSTM in favor of this new model.

Introduction

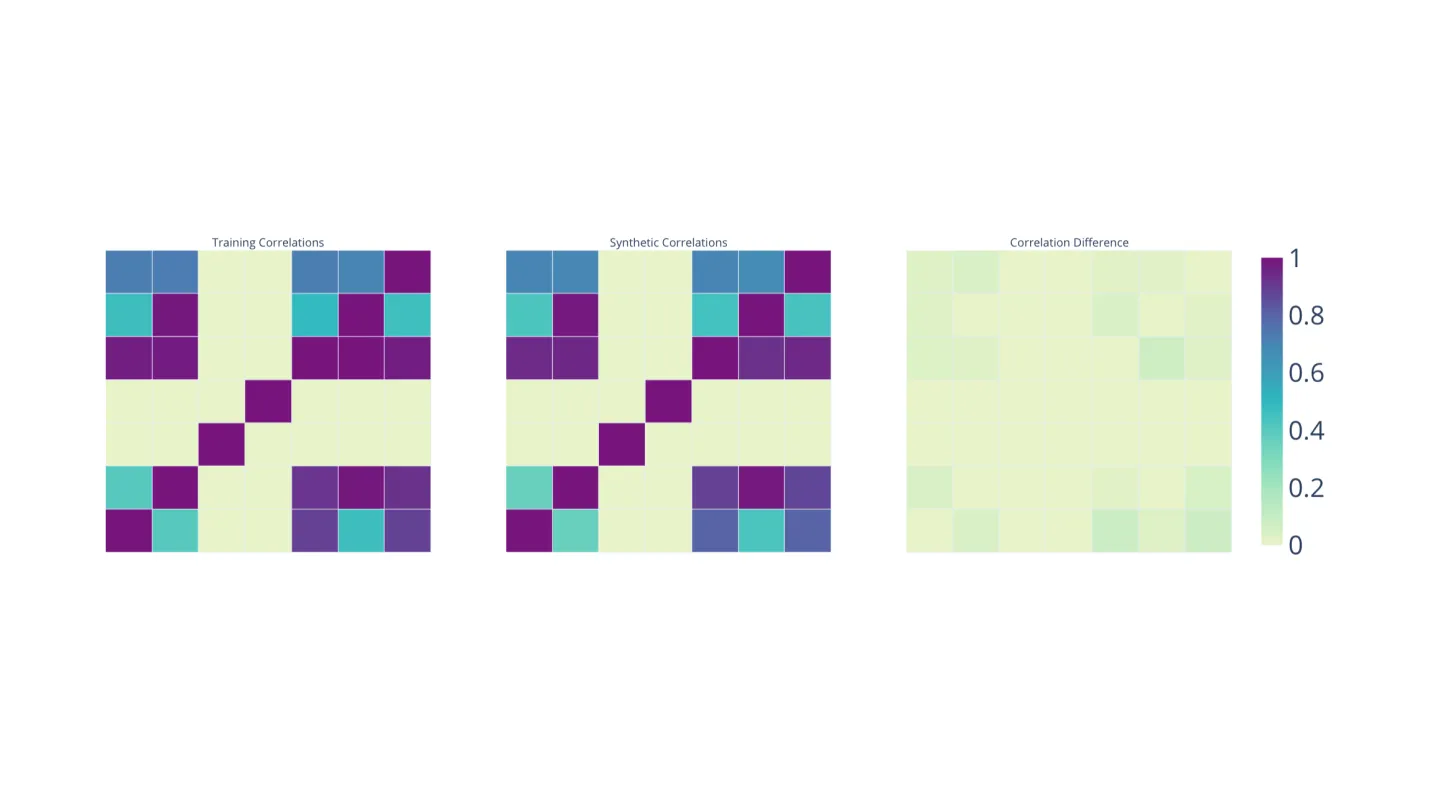

Data privacy is one of the biggest concerns when ML models learn features from sensitive information. At Gretel, we mitigate this risk with synthetic data models, which generate artificial datasets that preserve the insights and distributions of the real-world data while adding enhanced privacy protections. In this post, we will implement a practical attack on synthetic data models, described in The Secret Sharer: Evaluating and Testing Unintended Memorization in Neural Networks by Carlini et al. We will use this attack to measure how well the synthetic model protects sensitive data. At the end, we compare the results of various model parameter settings, including differential privacy, and find some fascinating results!

You can follow along with our notebook here:

The Dataset

In this example, we’ll work with a credit card transaction fraud detection dataset from Kaggle. It includes 1.3 million legitimate and fraudulent transactions from simulated credit card numbers.

We select four informative yet sensitive features from the dataset: the last four digits of the credit card number, gender, and the first and last name of the card owner. We consider a random sample of 28,000 rows to limit runtime of the experiments. Why did we choose these features?

Credit card numbers contain sensitive information about the owner. We simplify each credit card number to its last four digits, since this form is commonly used and the full numbers vary in length.

There are more than 860 unique credit card numbers in the training dataset. Including the first name, last name, and gender of the card owners reduces the risk of generating exactly the same records by chance. There’s a small probability that a synthetic model generates the same credit card number, but it is far less probable that it replays the same number together with the same owner's first and last name.
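As a rough illustration of this preparation step, here is a minimal pandas sketch. The column names `cc_num`, `gender`, `first`, and `last`, the output name `last4`, and the helper `build_training_frame` are assumptions made for this example, and the toy rows are made up rather than taken from the Kaggle data.

```python
import pandas as pd

def build_training_frame(transactions: pd.DataFrame, n_rows: int, seed: int = 0) -> pd.DataFrame:
    """Keep the four selected features and draw a random sample of rows."""
    selected = pd.DataFrame({
        # Cast to str first: card numbers vary in length, so slice from the end.
        "last4": transactions["cc_num"].astype(str).str[-4:],
        "gender": transactions["gender"],
        "first": transactions["first"],
        "last": transactions["last"],
    })
    return selected.sample(n=n_rows, random_state=seed).reset_index(drop=True)

# Made-up rows standing in for the real transactions (assumed column names).
toy = pd.DataFrame({
    "cc_num": [4532015112830366, 371449635398431, 6011000990139424],
    "gender": ["F", "M", "F"],
    "first": ["Ana", "Ben", "Cy"],
    "last": ["Lee", "Ng", "Oz"],
    "amt": [1.0, 2.0, 3.0],
})
train = build_training_frame(toy, n_rows=2)
```

On the real data, the same helper would be called with `n_rows=28000` to match the sample size used in this post.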

The following is a sample of the dataset with all features:

Here’s a sample of 28,000 records with selected features from the above dataset. We use this as the training dataset:

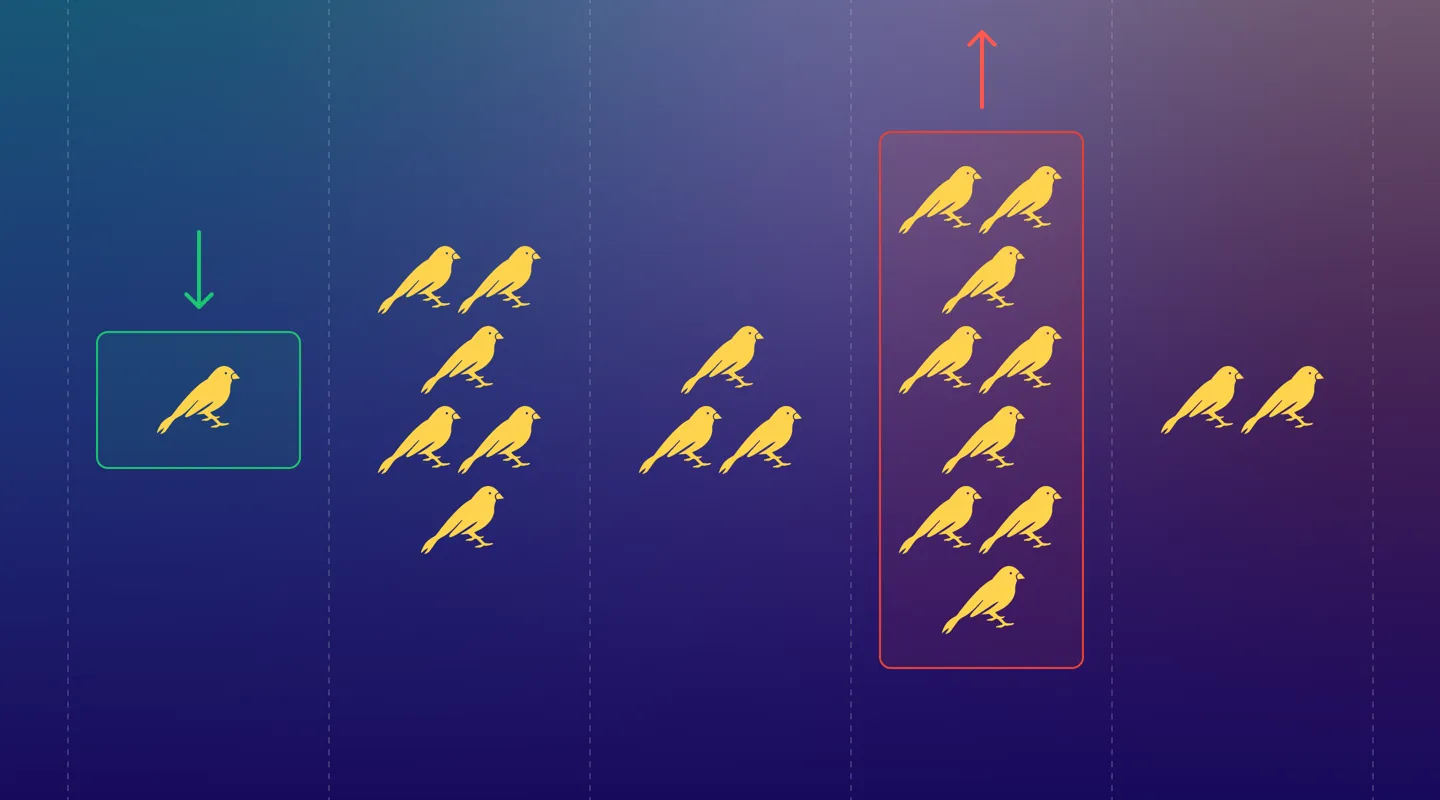

The Attack

A practical way to test whether synthetic data is protected from adversarial attacks is to measure how much the model memorizes secret values, i.e., “canaries”, that are planted in the training data. The more canaries the model generates, the more it has memorized sensitive information and the more prone the dataset is to adversarial attacks. You can read more about various types of attacks in this blog.

Let’s start by generating some canaries, each with a different count. The secret values are unique and follow the same format as the training dataset. We randomly sample the training set and insert the canaries. Here is a sample of the code we used:
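The sketch below is not the original code from our experiments. It is a minimal illustration of the same idea, assuming that "inserting" means overwriting randomly chosen training rows so that the dataset size stays fixed. The helper names `insert_canaries` and `count_canary`, the column names, and the canary values are all hypothetical.

```python
import pandas as pd

def insert_canaries(train, canaries, seed=0):
    """Overwrite randomly chosen rows with canary records.

    `canaries` is a list of (record_dict, count) pairs. Each record is written
    into `count` distinct rows, and no row receives more than one canary.
    """
    total = sum(count for _, count in canaries)
    if total > len(train):
        raise ValueError("more canary rows requested than training rows")
    out = train.copy()
    # Draw all target rows at once so canaries never overwrite each other.
    targets = out.sample(n=total, random_state=seed).index
    start = 0
    for record, count in canaries:
        rows = targets[start:start + count]
        for column, value in record.items():
            out.loc[rows, column] = value
        start += count
    return out

def count_canary(df, record):
    """Count rows that match every field of a canary record."""
    mask = pd.Series(True, index=df.index)
    for column, value in record.items():
        mask &= df[column] == value
    return int(mask.sum())

# Made-up training frame and canaries, for illustration only.
train = pd.DataFrame({
    "last4": [f"{i:04d}" for i in range(100)],
    "gender": ["F", "M"] * 50,
    "first": ["Ana"] * 100,
    "last": ["Lee"] * 100,
})
canary_a = {"last4": "9999", "gender": "F", "first": "Zed", "last": "Quux"}
canary_b = {"last4": "8888", "gender": "M", "first": "Yan", "last": "Corge"}
seeded = insert_canaries(train, [(canary_a, 3), (canary_b, 5)])
```

The same `count_canary` helper can be pointed at a generated dataset to count how many times each secret value was replayed.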

Let’s then look at the canary values and their count in the 28,000 records of the training dataset:

Now, we run our experiments. We train the synthetic model with four different neural network and privacy settings and generate a dataset from each. Each model’s performance is evaluated on overall prediction accuracy and given a synthetic quality score (SQS) from 0 to 100. You can read this blog for more info on how we determine synthetic data quality. Let’s look at the models and how they did:

- Vanilla Model: In this model, we used the standard synthetic data configurations without differential privacy. This is the benchmark experiment. We compare the results from the rest of the models with this.

- DP Model: We used differential privacy (DP) with a small noise multiplier. The accuracy and SQS remain almost the same as in the benchmark, while the epsilon is high.

- Noisy DP Model: DP with a high noise multiplier is used in this model. We achieve a low epsilon value while sacrificing some accuracy. The overall SQS is still good.

- Filtered DP Model: In this model, the two privacy filters are set to “medium” and DP is used. The accuracy remains the same as the DP model with a small drop in SQS.

For more consistent results, we ran 100 trials for each canary value and model and visualized the outcomes as box plots showing the 5th to 95th percentiles. In this figure, wider box plots indicate more variability in the number of canaries generated, which means weaker protection of the private data.
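To make the box-plot summary concrete, here is a minimal NumPy sketch that reduces a list of per-trial canary counts to the 5th percentile, median, and 95th percentile. The helper name `summarize_trials` and the input counts are made up for illustration and are not results from our experiments.

```python
import numpy as np

def summarize_trials(counts):
    """Return the 5th percentile, median, and 95th percentile of per-trial canary counts."""
    values = np.asarray(counts, dtype=float)
    p5, median, p95 = np.percentile(values, [5, 50, 95])
    return {
        "p5": float(p5),
        "median": float(median),
        "p95": float(p95),
        # Width of the 5-95 band: a wider band means more variable leakage.
        "spread": float(p95 - p5),
    }

# Made-up counts: one value per trial.
summary = summarize_trials(range(101))
```

Comparing `spread` across models for the same canary gives a numeric counterpart to the visual width of each box.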

Since the counts of canaries #1 and #2 in the training data are relatively small, all four models almost never generated them in the synthetic data.

For canaries #3 and #4, the distribution is mostly dense around the median for all models except the “Filtered DP Model”. The “DP Model” and “Noisy DP Model” do a pretty good job of not replaying canaries with counts of ~38. Which of the two to choose depends on whether the user prioritizes performance or a low epsilon, respectively.

In the last row, which compares how the models memorize canaries with relatively high counts, the “Noisy DP Model” does not generate the secret values, while the “Filtered DP Model” replays the most canaries of all models. As mentioned before, it’s always about balancing the privacy guarantees of the secret data with the overall model accuracy. Here, the best choice for maximizing accuracy and privacy protection is the “DP Model”.

Now, we would like to define the “maximum value”: the largest number of times a canary can appear in the training dataset while the synthetic model still does not replay it, allowing for a small number of canaries leaking into the generated dataset (the error). In the following, we allow an error between 1 and 3. The higher the maximum value, the better the model is at not memorizing and replaying the canaries.
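One way to read this definition is as a threshold search. The sketch below assumes that each canary's leakage has already been summarized as a single number per training count, and that the scan stops at the first count whose leakage exceeds the error. The helper name `maximum_value` and the numbers are made up for illustration.

```python
def maximum_value(leaks_by_count, error):
    """Largest training count whose leakage stays within `error`.

    `leaks_by_count` maps a canary's count in the training set to the number
    of times it appeared in the generated data. Counts are scanned in
    increasing order and the scan stops at the first one that leaks too much.
    Returns None if even the smallest count exceeds the error.
    """
    best = None
    for train_count in sorted(leaks_by_count):
        if leaks_by_count[train_count] > error:
            break
        best = train_count
    return best

# Made-up leakage numbers: training count -> copies found in generated data.
made_up = {10: 0, 20: 0, 40: 1, 80: 2, 160: 9}
strict = maximum_value(made_up, error=0)
loose = maximum_value(made_up, error=2)
```

A stricter error gives a smaller maximum value for the same model, so comparisons between models only make sense at comparable error levels.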

When comparing the maximum values, we consider each model's median over all canaries (rows) in the figure above:

- Vanilla Model: Achieves the best accuracy while protecting the canaries with lower counts. The maximum value is 56 with an error of 2.

- DP Model: Has a reasonable privacy guarantee for canaries with counts between those of cases #4 and #5, resulting in a relatively high maximum value of 100. The performance remains reasonable.

- Noisy DP Model: Offers the best privacy guarantee at the cost of some model accuracy. The experiments show a maximum value of 145 with an error of 1 for this model.

- Filtered DP Model: Has almost the same overall maximum value as the Vanilla Model (~56). The Vanilla Model has a smaller error and achieves better results at higher canary counts.

Conclusion

As demonstrated above, models with various parameters and privacy settings can be used depending on the user's priorities in protecting the secret values and achieving the desired performance. When the model's performance is far more important than protecting the sensitive values, we use the “Vanilla Model”, while in the reverse scenario we use the “Noisy DP Model”. When performance and privacy are equally important, we use the “DP Model”. If this is exciting to you, feel free to join and share your ideas in our Slack community, or reach out at hi@gretel.ai.