Introducing Gretel's Privacy Filters

We're excited to announce the release of Gretel Synthetic's new Privacy Protection mechanisms. Now, on top of the privacy inherent in the use of synthetic data, users can add supplemental protection through a variety of privacy protection mechanisms, some new and some long-standing. These mechanisms help protect your synthetic data against adversarial attacks.

What are the privacy risks surrounding synthetic data?

Here at Gretel, a priority research area for us is staying on top of the ever-growing variety of attacks that adversaries use to gain insights into private data. Each attack requires a different level of access to training data, machine learning models, or data created by the models. Common examples of adversarial attacks on data include:

- Membership Inference: infer whether or not a given record is present in the training set

- Attribute Inference: infer sensitive attributes of a record based on a subset of attributes known to the attacker

- Memorization Attacks: exploit the ability of high capacity models to memorize certain sensitive patterns in the training data

- Model Inversion: act as an inverse of the target model in order to reconstruct the inputs that the model has memorized

- Model Extraction: create a substitute model of the target system to avoid paying for the target system or to launch additional attacks on the original model

- Model Evasion: modify the input to influence the model

- Model Poisoning: modify the training data to add a backdoor
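
To make the first item in this list concrete, here is a toy sketch of a membership inference guess made from synthetic data alone. It is a deliberately naive illustration, not a specific published attack and not anything Gretel ships: the function names, the field-mismatch distance, and the sample records are all hypothetical.

```python
from typing import Sequence

Record = Sequence[object]


def mismatched_fields(a: Record, b: Record) -> int:
    """Count the positions where two equal-length records differ."""
    if len(a) != len(b):
        raise ValueError("records must have the same number of fields")
    return sum(1 for x, y in zip(a, b) if x != y)


def guess_membership(
    candidate: Record,
    synthetic: Sequence[Record],
    max_mismatches: int = 0,
) -> bool:
    """Guess that `candidate` was in the training set if some synthetic
    record differs from it in at most `max_mismatches` fields."""
    return any(mismatched_fields(candidate, s) <= max_mismatches for s in synthetic)


# Hypothetical synthetic output and two records the attacker wants to test.
synthetic_records = [
    ("F", 34, "10001", "diabetes"),
    ("M", 51, "94107", "asthma"),
]
known_person = ("F", 34, "10001", "diabetes")  # appears verbatim in the synthetic data
stranger = ("M", 29, "60614", "none")          # differs from every synthetic record

leaked_guess = guess_membership(known_person, synthetic_records)
stranger_guess = guess_membership(stranger, synthetic_records)
```

A synthetic record that matches a real person exactly, or nearly so, hands this kind of attacker an easy guess.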

Introducing Gretel's Privacy Protection Filters

Many of the listed adversarial attacks require access to the model, which at Gretel is tightly controlled: only authenticated members of a project can access and run a synthetic model. To counter the remaining potential attacks, we've studied their nature and isolated the weak points in a model or dataset that are commonly exploited. We've countered these weak points with the following privacy protection mechanisms:

- Overfitting Prevention: This existing privacy mechanism ensures that the synthetic model will stop training before it has a chance to overfit. When a model is overfit, it will start to memorize the training data as opposed to learning generalized patterns in the data. This is a severe privacy risk as overfit models are commonly exploited by adversaries seeking to gain insights into the original data. Overfitting prevention is enabled using the `validation_split` and `early_stopping` configuration settings.

- Similarity Filters: Similarity filters ensure that no synthetic record is overly similar to a training record. Synthetic records that are overly similar to training records can be a severe privacy risk, as adversarial attacks commonly exploit such records to gain insights into the original data. Similarity filtering is enabled by the `privacy_filters.similarity` configuration setting. A value of `medium` will filter out any synthetic record that is an exact duplicate of a training record. A value of `high` will filter out any synthetic record that is 99% similar or more to a training record.

- Outlier Filters: Outlier filters ensure that no synthetic record is an outlier with respect to the training dataset. Outliers revealed in the synthetic dataset are a serious privacy risk: they can be exploited by Membership Inference, Attribute Inference, and a wide variety of other adversarial attacks. Outlier filtering is enabled by the `privacy_filters.outliers` configuration setting. A value of `medium` will filter out any synthetic record that has a very high likelihood of being an outlier. A value of `high` will filter out any synthetic record that has a medium to high likelihood of being an outlier.

- Differential Privacy: We provide an experimental implementation of DP-SGD that modifies the optimizer to offer provable guarantees of privacy, enabling safe training on private data. Differential Privacy can reduce utility and often requires larger datasets to work well, but it uniquely provides privacy guarantees against both known and unknown attacks on data. Differential Privacy can be enabled by setting `dp: True` and can be tuned using the associated configuration settings.
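
The two similarity levels described above can be sketched in a few lines. This is a minimal illustration, not Gretel's implementation: the text does not say how similarity is measured, so the fraction-of-matching-fields metric and the names `field_similarity` and `similarity_filter` are assumptions. Only the thresholds (exact duplicate for `medium`, 99% or more for `high`) come from the description.

```python
from typing import Dict, List, Sequence

Record = Sequence[object]

# Thresholds taken from the description: "medium" drops exact duplicates,
# "high" drops records that are 99% similar or more.
SIMILARITY_THRESHOLDS: Dict[str, float] = {"medium": 1.0, "high": 0.99}


def field_similarity(a: Record, b: Record) -> float:
    """Fraction of positions where two equal-length records hold the same
    value. This metric is an assumption made for illustration."""
    if len(a) != len(b):
        raise ValueError("records must have the same number of fields")
    if len(a) == 0:
        return 1.0
    return sum(1 for x, y in zip(a, b) if x == y) / len(a)


def similarity_filter(
    synthetic: Sequence[Record],
    training: Sequence[Record],
    level: str,
) -> List[Record]:
    """Keep only synthetic records whose similarity to every training
    record stays below the threshold for `level`."""
    threshold = SIMILARITY_THRESHOLDS[level]
    kept: List[Record] = []
    for s in synthetic:
        if not any(field_similarity(s, t) >= threshold for t in training):
            kept.append(s)
    return kept


# Hypothetical 100-field records so that "99% similar" is distinguishable
# from an exact duplicate.
training = [tuple(range(100))]
exact_copy = tuple(range(100))
near_copy = tuple(range(99)) + (-1,)  # 99 of 100 fields match
distinct = tuple(range(100, 200))     # no fields match
synthetic = [exact_copy, near_copy, distinct]

kept_medium = similarity_filter(synthetic, training, "medium")
kept_high = similarity_filter(synthetic, training, "high")
```

Under these assumptions, `medium` removes only the exact copy, while `high` also removes the near copy and keeps just the distinct record.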

Synthetic model training and generation are driven by a configuration file. Here is an example configuration:
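
As a rough stand-in, the settings named in the list above can be gathered in a plain Python dict. This sketch does not reproduce Gretel's actual configuration file format: the nesting, the mechanism labels, and the helper `enabled_mechanisms` are assumptions, and only the setting names and the `medium`/`high` values come from the text. The helper also assumes that overfitting prevention counts as enabled only when both `validation_split` and `early_stopping` are on.

```python
from typing import Any, Dict, List

# Setting names come from the descriptions above; the layout is illustrative.
example_config: Dict[str, Any] = {
    "validation_split": True,
    "early_stopping": True,
    "dp": False,
    "privacy_filters": {
        "similarity": "medium",
        "outliers": "medium",
    },
}

FILTER_LEVELS = ("medium", "high")


def enabled_mechanisms(config: Dict[str, Any]) -> List[str]:
    """List which of the four privacy mechanisms a config turns on.
    The returned labels are hypothetical names chosen for this sketch."""
    enabled: List[str] = []
    if config.get("validation_split") and config.get("early_stopping"):
        enabled.append("overfitting_prevention")
    filters = config.get("privacy_filters") or {}
    if filters.get("similarity") in FILTER_LEVELS:
        enabled.append("similarity_filter")
    if filters.get("outliers") in FILTER_LEVELS:
        enabled.append("outlier_filter")
    if config.get("dp"):
        enabled.append("differential_privacy")
    return enabled


enabled = enabled_mechanisms(example_config)
```

With this example, three of the four mechanisms are on and Differential Privacy is off.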

Understanding Privacy Protection Levels

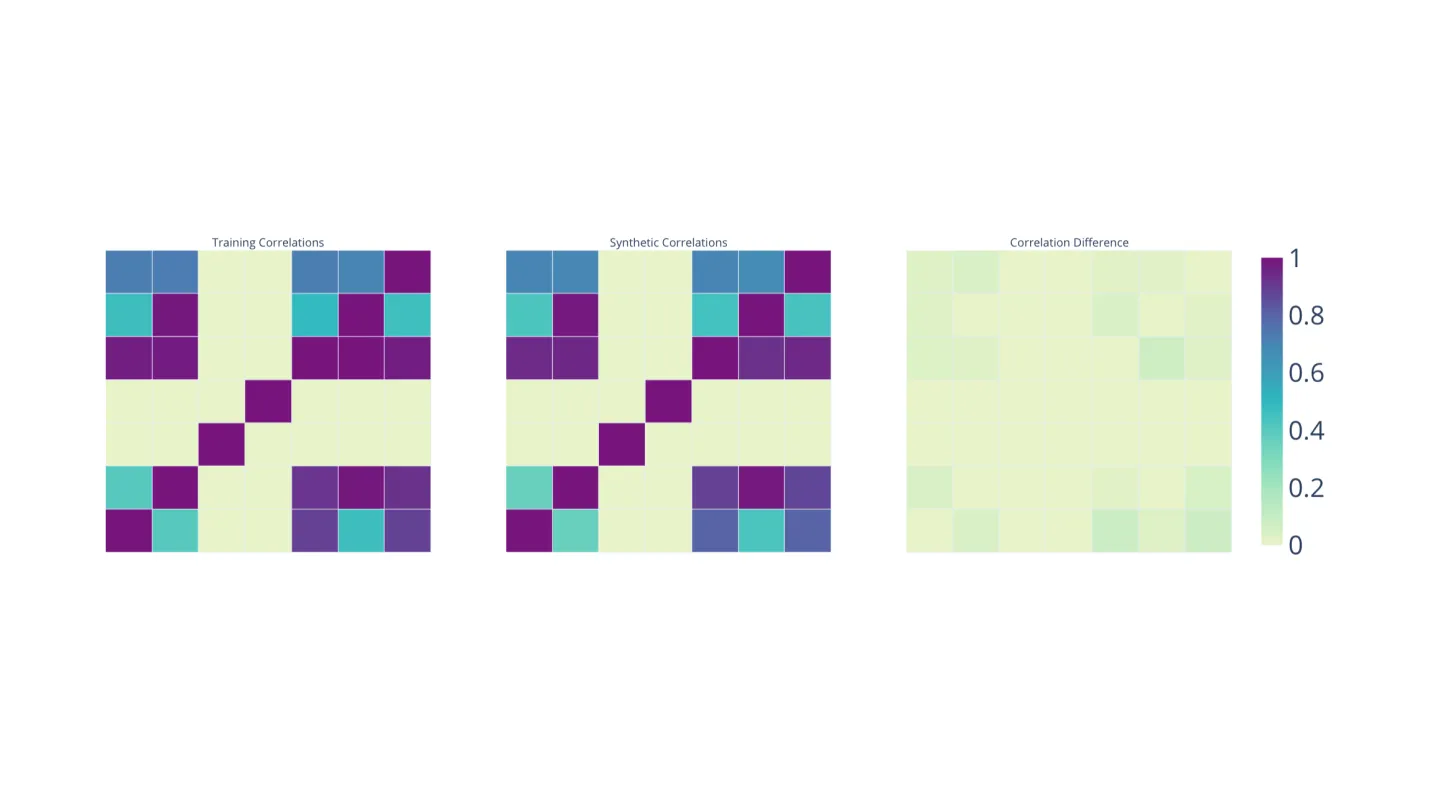

In our Gretel Synthetic Report, we score your Privacy Protection Level based on the number of privacy mechanisms you've enabled. At the very beginning of the report we provide a graphic showing your Privacy Protection Level:

We then show you exactly which privacy mechanisms you have enabled:

We also provide a handy matrix with the recommended Privacy Protection Levels for a given data sharing use case:

Give it a Try!

With Gretel's new Privacy Protection Mechanisms, your data is safer than ever. You can quickly share your data knowing that any sensitive information is well protected. Stay tuned for part two of this series on our Privacy Protection Mechanisms, where we'll step you through a notebook that assesses their impact on machine learning accuracy. As always, we welcome feedback via email (hi@gretel.ai), or you can find us on Slack!