Got text? Use Named Entity Recognition (NER) to label PII in your data

In this post, we'll walk you through analyzing a Yelp reviews dataset and labeling people names and geographic locations

Is your unstructured data safe?

80% of data is unstructured, and yet most companies are unprepared when it comes to labeling and securing it. Those that do have processes in place often use laborious and error-prone manual reviews to secure their data, which is neither reliable nor scalable.

Gretel’s data classification APIs make it simple to identify and label sensitive content in both structured and unstructured data. Gretel can currently detect over 40 entity types including names, addresses, gender, birthday, and credentials. We do this through a combination of regular expression-based detections, custom detectors for entities based on FastText, and word embeddings. And we now also support spaCy's industrial strength Natural Language Processing (NLP) for identifying person names and locations.

Let’s get started!

Sign up for a free account using the Gretel Console, and then follow along below. Or use our Jupyter Notebook to get started! In this example, we'll walk you through labeling free text in a dataset of restaurant reviews using Gretel’s NLP and labeling APIs.

First, let's install all our python dependencies and configure the Gretel client.

Next, we'll connect to Gretel’s API service. You'll need your API key for this step.

We can now load a sample dataset containing PII into Pandas. Let’s use the Yelp Reviews dataset from HuggingFace’s datasets library.

Next, let's create a project in Gretel for labeling our data.

We'll use a very simple configuration file. NLP can be enabled in a single line. Note: Using spaCy can decrease model prediction throughput by up to 70%, that's why it's turned off by default.

The next step is to create the classification model and verify it to make sure it has been configured correctly.

Once the model has been configured and verified, all we have to do is run the full dataset through it.

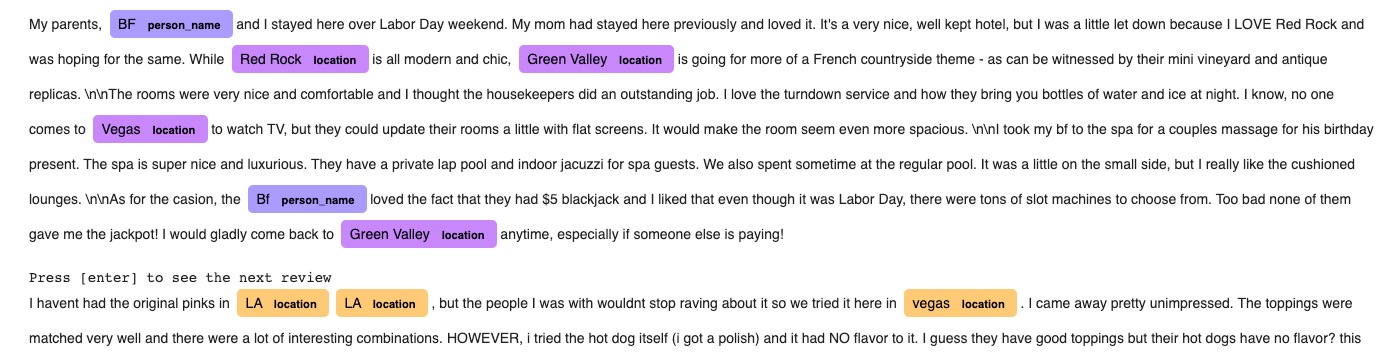

Here's a sample of the labeled reviews. If you're interested in how we color-coded the labels, check out our blueprint notebook in Github or run it in Google Colab. You can also use this blueprint to label your own datasets using NLP.

Did you know?

In addition to classifying sensitive data in unstructured text, you can also transform it. Gretel includes support for replacing names and addresses with fake versions, or securely hashing PII (personally identifiable information) to meet compliance requirements. Here's a 6-min video walkthrough on redacting PII from a dataset using the Gretel CLI, or get all the details from our docs. Don’t forget to set use_nlp: true in your config file.

As with all of Gretel’s APIs, you can get started quickly with SaaS, or deploy our NLP into your own cloud or VPC. Sign up to get your API key and see for yourself how easy it is.

What do you think?

Did you find this blog post interesting? Have any use cases you'd like us to write about? Email us, tweet us, or ping us on Slack. We'd love to hear from you!