Fine-tuning Models for Healthcare via Differentially-Private Synthetic Text

.png)

Organizations in specialized domains like healthcare, law, and banking often use unique acronyms, codes, terminologies, styles, and languages specific to their fields. Fine-tuning large language models (LLMs) on organizations’ data can greatly improve the quality of model responses by aligning them with domain-specific language and style.

However, organizations’ data often contain private and sensitive information, especially in healthcare. Fine-tuning models directly on original organization data can risk leaking private information from the training data, even if an attacker has only black-box access to the model (i.e., can query it through an API) [1].

In this blogpost, we show how to fine-tune models with differentially-private synthetic text to improve the quality of model responses, while ensuring sensitive information remains protected. The synthetic text is generated by Gretel’s GPT model fine-tuned with differential privacy based on your organization’s data [2, 3]. This approach preserves the style and domain-specific language used in your organization, while protecting data privacy. The synthetic text is then used for fine-tuning LLMs for generating responses.

Differential privacy (DP) [4] has become one of the most prominent privacy-enhancing technologies. By adding noise during the model training procedure, it provides formal guarantees that, with high probability, no training data will be ever extracted from the model. Training LLMs with differential privacy has been shown to be feasible [5], especially if one makes use of parameter-efficient fine-tuning (PEFT) techniques.

To demonstrate, we fine-tuned the Claude 3 Haiku model for generating clinical notes with the input of a transcript of conversation between a doctor and a patient. The input transcript can be obtained using services like AWS HealthScribe. The generated clinical notes summarize the consultations in a style and language unique to your organization. Such a fine-tuned model can save doctors precious time, considering that they often need to write the note themselves after each consultation.

Follow along:

Generating differentially-private synthetic text using Gretel's GPT

Gretel's GPT makes it easy for us to generate private synthetic text in just a few steps [6]. The following configuration creates a synthetic text generation job named final-run-dp, which fine-tunes a GPT model with the given data_source. The configuration also includes parameters for fine-tuning, as well as parameters for differential privacy.

schema_version: "1.0"

name: final-run-dp

models:

- gpt_x:

data_source:

- agbonnet_augmented_clinical_notes.csv

ref_data: {}

pretrained_model: gretelai/Phi-3-mini-128k-instruct

params:

batch_size: 8

epochs: 5

steps: null

weight_decay: 0.01

warmup_steps: 100

lr_scheduler: linear

learning_rate: 0.008723427557099954

max_tokens: 784

gradient_accumulation_steps: 32

peft_params:

lora_r: 8

lora_alpha_over_r: 1

target_modules:

- q_proj

- v_proj

- k_proj

- o_proj

- gate_proj

- down_proj

- up_proj

privacy_params:

dp: true

epsilon: 8

delta: autoWe can run a job that uses this configuration via the Gretel SDK:

from gretel_client import Gretel

CONFIG_YAML = """

<insert the YAML configuration here>

"""

gretel = Gretel(

project_name="new_project",

api_key="prompt",

endpoint="https://api.gretel.cloud",

)

gretel.submit_train(base_config=CONFIG_YAML)For the proof-of-concept, we used the AGBonnet/augmented-clinical-notes dataset. Some of the notes and conversations were fairly long, so we asked Claude 3 Haiku to shorten them to expedite the fine-tuning procedure. We formatted the data as follows:

*** TRANSCRIPT ***

Doctor: What brings you to the Outpatient department today?

Patient: I have discomfort in my neck and lower back, and I'm having trouble standing up straight.

Doctor: Can you tell me more about the discomfort?

Patient: I have pain in my neck, and my head is always turned to the right and upwards. I also have sideways bending in my lower back.

Doctor: How long have you been experiencing this?

Patient: About four months, since I started taking olanzapine for my bipolar affective disorder.

Doctor: I see. I'll order some tests and we'll discuss further.

*** NOTE ***

A 16-year-old girl presented with complaints of neck and lower back discomfort, restricted body movements, and abnormal posturing. She had been experiencing these symptoms for four months, which began after starting olanzapine for her bipolar affective disorder. The symptoms were characterized by sustained contraction of neck muscles, leading to abnormal head and neck movements, and required assistance with daily activities. Despite trying various medications, including trihexyphenidyl, propranolol, and phenargan, the symptoms persisted. The patient's symptoms worsened with reintroduction of olanzapine and improved with discontinuation of the medication. She was eventually started on tetrabenazine, which showed some response in improving her abnormal movements. The case highlights the development of tardive dystonia in an adolescent girl with bipolar affective disorder, likely caused by olanzapine, and emphasizes the need for caution in using this medication.Box 1: Actual sample from the original dataset, formatted according to the chosen template.

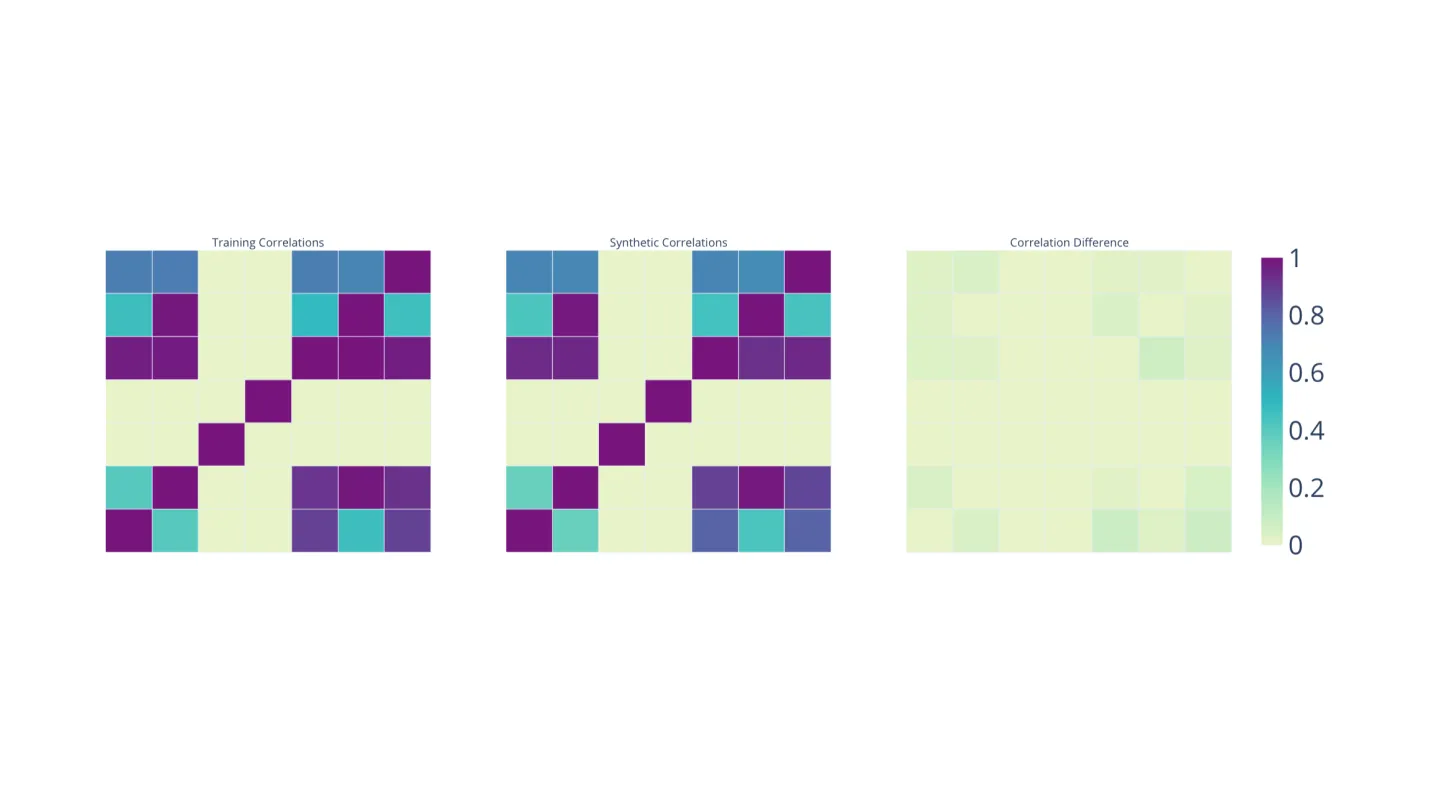

We used 10,000 such samples as input to the fine-tuning procedure in Gretel's GPT. The 5 epochs of fine-tuning took approximately 8 hours to complete. After training, the Gretel console provided the following data quality report, indicating the generated data closely resembles the original data. You can take a look at our documentation for more details on how the score is calculated.

Here is an example of what the synthetic text looks like:

*** TRANSCRIPT ***

Doctor: Good morning, sir. I'm Dr. X. You're here for a follow-up on your oral lichenoid ulcerative leukoplakia. Can you tell me about your symptoms and the treatment you've received?

Patient: I have a 3.5 cm ulcer on my tongue and roof of my mouth that's been growing for 2 years.

It started as a red, painless ulcer and has turned white and non-healing.

I was treated with antibiotics and steroid cream, but it didn't improve.

Doctor: Okay, I'd like to take a closer look at the lesion. I'd like to take a biopsy to confirm the diagnosis.

Patient: Okay, I understand.

Doctor: The biopsy shows that the lesion is benign. I'd like to refer you to a specialist for further treatment.

Patient: Okay, thank you.

Doctor: You're welcome. If you have any other questions or concerns, please don't hesitate to ask.

Patient: No, I think I understand everything. Thank you, doctor.

*** NOTE ***

A 57-year-old man presented to the Department of Dentistry and Oral Medicine at the Uppsala University Hospital, Uppsala, Sweden, with a 2-year history of a painless oral ulcer that had been slowly growing on his tongue and roof of his mouth.

The lesion was initially a red, painless ulcer that had changed to a white, non-healing leukoplakic ulcer over time.

The patient was treated with antibiotics and steroid cream, but the lesion did not improve.

A biopsy confirmed the diagnosis of oral lichenoid ulcerative leukoplakia (OLUL).

The patient was referred to the Department of Surgery for further treatment, and underwent a partial glossofaringectomy and excision of the affected area.

The lesion was successfully excised, and the patient was followed up for 2 years with no recurrence.Box 2: Private synthetic sample generated with Gretel's GPT, by fine-tuning a LLM with differential privacy.

We observe that the note contains details that are not in the conversation, as expected, since the doctor will often use other information to write it. This is not necessarily an issue, though, we could instruct the downstream LLM to exclude any such details.

We generated 10,000 synthetic samples, which were used to fine-tune the downstream LLM on Amazon Bedrock.

Using synthetic text to fine-tune model on Amazon Bedrock

For the downstream task, we have chosen to fine-tune Claude 3 Haiku on Amazon Bedrock. We first formatted the data in the required JSON format.

import json

pattern = r"^\*\*\* TRANSCRIPT \*\*\*\n\n(.*)\n\n\*\*\* NOTE \*\*\*\n\n(.*)$"

{"system": "<system message>","messages":[{"role": "user", "content": "<user query>"},{"role": "assistant", "content": "<expected generated text>"}]}

processed, jsonl = 0, ''

for t in synth_data['text']:

m = re.match(pattern, t, re.DOTALL)

if m:

instr = "You are given the transcript of a conversation between a doctor and a patient, and will generate a clinical note summarizing that conversation."

prompt = m.group(1)

completion = m.group(2)

sample = {"system": instr, "messages": [{"role": "user", "content": prompt}, {"role": "assistant", "content": completion}]}

jsonl += json.dumps(sample) + '\n'

processed += 1

print(f'Processed {processed} valid entries')

with open('ftdata.jsonl', 'w') as fid:

fid.write(jsonl)Box 3: Data converted to JSON format

We then uploaded all the 10,000 synthetic samples to Amazon S3, and created a fine-tuning job on the AWS Console (see Figure 2). After about 7 hours, the fine-tuning was complete.

We evaluated the fine-tuned Haiku model on the original dataset. We additionally instructed the model to replace gender and age with tags. For instance, when providing the fine-tuned model with the transcript in Box 1, it returns the following output

A [GENDER], [AGE] patient presented to the Outpatient department with discomfort in the neck and lower back, accompanied by sideways bending of the spine.

The patient had a history of bipolar affective disorder and was taking olanzapine, which was associated with the onset of the symptoms.

Physical examination revealed a slight sideways bending of the spine, and the patient was unable to stand up straight.

Imaging studies, including X-ray, MRI, and CT scans, showed bilateral spondylolysis and spondylolisthesis, as well as degenerative changes in the spine.

The patient was treated with oral steroids and physical therapy, and the symptoms improved over time.

The patient was discharged with a prescription for oral steroids and physical therapy, and was advised to follow up with the treating physician.

Box 4: Note generated by the fine-tuned Haiku model, given the transcript in Box 1.

As expected, this generated note is quite similar to the original one, especially in terms of style and organization. Our baseline is the base model, which produces something quite different, although still factual:

Clinical Note:

Patient: [AGE], [GENDER]

Presenting Complaint: Neck discomfort, lower back pain, and difficulty standing upright.

History of Present Illness:

The patient has been experiencing neck pain, with their head turned to the right and upwards, as well as sideways bending in the lower back, for the past four months.

The patient reports that these symptoms began after starting olanzapine for their bipolar affective disorder.

Physical Examination:

The patient's neck appears to be turned to the right and upwards, and there is evidence of sideways bending in the lower back.

Further evaluation is needed to determine the underlying cause of these symptoms.

Plan:

The doctor has ordered additional tests to investigate the cause of the patient's symptoms.

A follow-up appointment will be scheduled to discuss the results and determine the appropriate treatment plan.

Box 5: Note generated by the base model, given the transcript in Box 1. Notice how it looks very different from the original note.

We repeat this inference step, using both the fine-tuned and base models for all samples in the original dataset. We then compare the generated notes with the original notes. Note that the original dataset is unknown to the fine-tuned model, which has only seen private synthetic text, so there is no "data leakage".

Evaluating model on downstream task

To compare generated notes with original notes, we used two different metrics:

- Gretel's Synthetic Text Quality Score [7], a metric that compares two distinct sets with respect to some text

- semantic similarity using text embeddings

- structural similarity, by analyzing properties such as length and word count.

LLM-as-a-judge – We asked a different model (Claude 3.5 Sonnet) how well the two notes compare in terms of meaning, style and organization. We used the following rubric for the LLM-as-a-judge evaluation:

I will provide two clinical notes, note 1 and note 2, and I want you to compare them in the following aspects:

* Meaning: note 2 conveys the same meaning as note 1.

* Style: note 2 is written in the same style as note 1, considering potential quirks and mannerisms.

* Organization: note 2 is organized in the same fashion as note 1, in terms of sections and paragraphs.

For each of these aspects, I want you to produce a rating between 1 and 5, depending on how truthful are the statements above. Do not say anything else besides the ratings.

Box 6: Rubric used in LLM-as-a-judge.

In Figure 3, we can see that samples generated by the fine-tuned model perform significantly better than the base model, especially in terms of structural similarity. Figure 4 shows that while both models have similar results in terms of meaning; the fine-tuned model outperforms the base model in terms of style and organization.

Conclusion

Using differential privacy guarantees that, with high probability, the synthetic dataset we used for the downstream task does not contain any sensitive information from the original dataset. This approach effectively unlocks the data for use in enhancing model utility, while providing strong assurances that patient privacy is protected.

References

- Carlini, Nicholas, et al. "The secret sharer: Evaluating and testing unintended memorization in neural networks." 28th USENIX security symposium (USENIX security 19). 2019.

- Yue, Xiang, et al. "Synthetic Text Generation with Differential Privacy: A Simple and Practical Recipe." Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2023.

- Ramaswamy, Lipika and Manoel, Andre, "Generate Differentially Private Synthetic Text with Gretel GPT", 2024.

- Dwork, Cynthia. "Differential privacy." International colloquium on automata, languages, and programming. Berlin, Heidelberg: Springer Berlin Heidelberg, 2006.

- Abadi, Martin, et al. "Deep learning with differential privacy." Proceedings of the 2016 ACM SIGSAC conference on computer and communications security. 2016.

- Yu, Da, et al. "Differentially Private Fine-tuning of Language Models." International Conference on Learning Representations. 2022.

- Emadi, Marjan and Pang, Nicole, "Measure the utility and quality of GPT-generated text using Gretel’s new text report", 2023.

Authors

Andre Manoel, Lipika Ramaswamy, Maarten van Segbroeck, Gretel

Qiong Zhang, Shashi Raina, AWS