Fast data cataloging of streaming data for fun and privacy

The Metastore

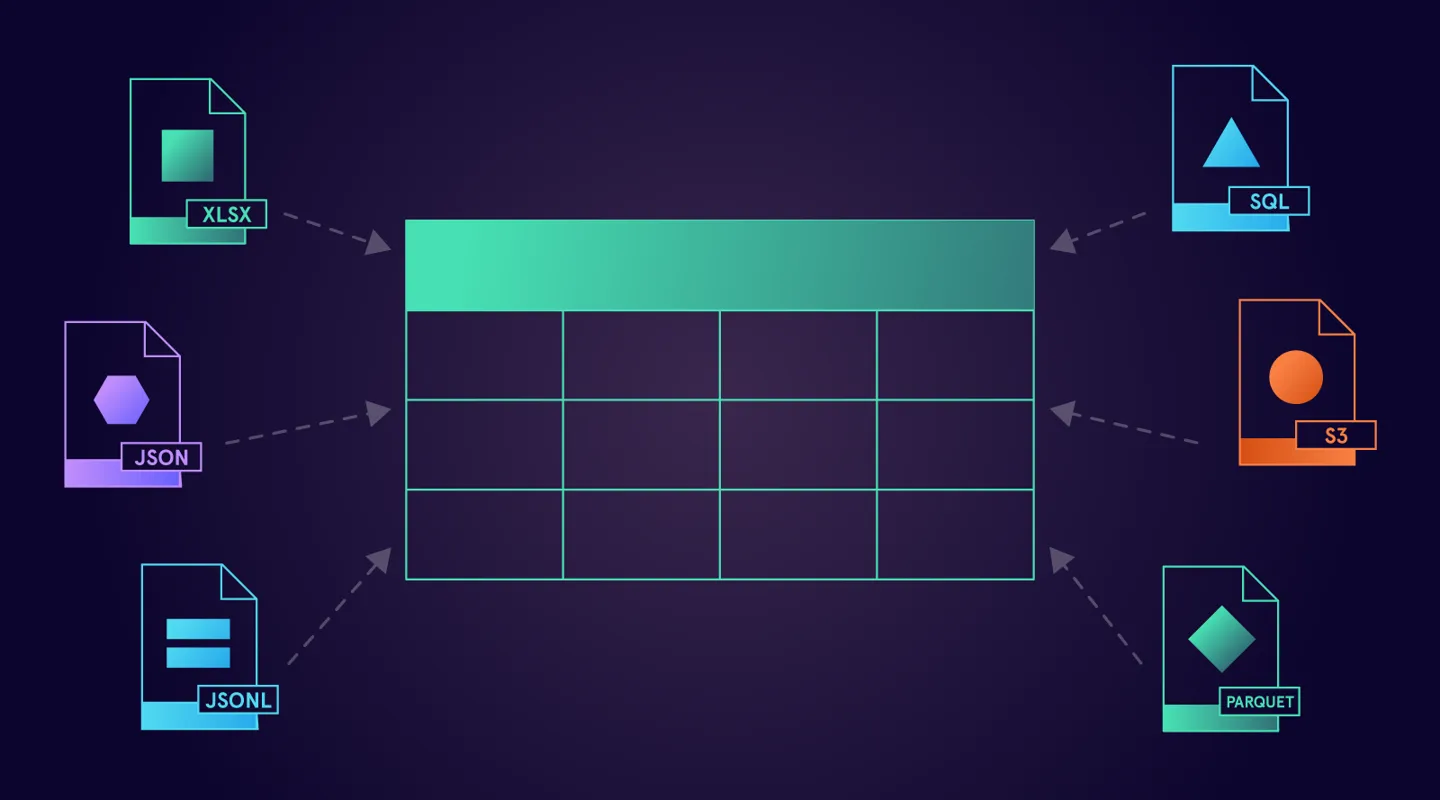

Gretel’s APIs automatically build a metastore by default, making it easy to understand what is inside your data. Many developers are used to working with metastores provided by technologies like Hive and incorporated into services like AWS Glue. The metadata these systems provide is extremely useful, but not very friendly to work with. At Gretel, we strive to make everything accessible through our REST API, a concept virtually every developer is familiar with.

Once you start sending records to the Gretel API, indexing into the metastore begins automatically. In this case, we were streaming several hundred GBFS free_bike_status.json feeds into our API. The most obvious field to start with from the specification is “bike_id”, since it is most likely a primary identifier.
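For readers who haven't looked at the feed, here is a minimal sketch of turning one snapshot into per-bike records. It assumes the GBFS layout in which bikes sit under `data.bikes` and the snapshot time is the top-level `last_updated`; the function names are hypothetical, and the step that actually ships records to Gretel is left out rather than guessed at.

```python
import json
import urllib.request
from typing import Iterator


def fetch_feed(url: str) -> dict:
    """Download one snapshot of a GBFS free_bike_status.json feed (URL supplied by the caller)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def iter_bike_records(payload: dict) -> Iterator[dict]:
    """Yield one flat record per bike, stamped with the snapshot's last_updated time."""
    snapshot_ts = payload.get("last_updated")
    for bike in payload.get("data", {}).get("bikes", []):
        record = dict(bike)  # copy so the original payload is not mutated
        record["last_updated"] = snapshot_ts
        yield record
```

Each yielded record is a flat dictionary, which is the shape a field-level index like the metastore works with.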

Pretty straightforward here: most of the values are strings, we’ve seen over 17 million records with this field, and the approximate unique count is just over 1.1 million. Our initial hunch: 1.1 million unique IDs is a lot of e-bikes and scooters out there…
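A rough sketch of the kind of per-field statistics described above, assuming records arrive as flat dictionaries. It counts how often each field appears, which Python types its values have, and an approximate unique count using a small HyperLogLog sketch. This is not Gretel's implementation: the class and function names are hypothetical, and HyperLogLog is simply one common way to estimate cardinality in bounded memory.

```python
import hashlib
import math
from collections import Counter, defaultdict


class HyperLogLog:
    """Minimal HyperLogLog sketch: approximate distinct count in fixed memory."""

    def __init__(self, p: int = 12) -> None:
        self.p = p                      # 2**p registers; std. error ~ 1.04 / sqrt(2**p)
        self.m = 1 << p
        self.registers = [0] * self.m
        self.alpha = 0.7213 / (1 + 1.079 / self.m)  # bias constant, valid for m >= 128

    def add(self, value: str) -> None:
        digest = hashlib.sha1(value.encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")          # 64-bit hash
        idx = h >> (64 - self.p)                       # top p bits choose a register
        rest = h & ((1 << (64 - self.p)) - 1)          # remaining 64 - p bits
        rank = (64 - self.p) - rest.bit_length() + 1   # 1-based position of leftmost 1-bit
        if rank > self.registers[idx]:
            self.registers[idx] = rank

    def count(self) -> float:
        raw = self.alpha * self.m * self.m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * self.m and zeros:
            return self.m * math.log(self.m / zeros)   # small-range correction (linear counting)
        return raw


class FieldStats:
    """Hypothetical per-field entry: occurrences, value types, approximate distinct values."""

    def __init__(self) -> None:
        self.count = 0
        self.types: Counter = Counter()
        self.distinct = HyperLogLog()

    def observe(self, value) -> None:
        self.count += 1
        self.types[type(value).__name__] += 1
        self.distinct.add(repr(value))  # repr keeps 1 and "1" distinct

    def p_unique(self) -> float:
        return self.distinct.count() / self.count if self.count else 0.0


def index_records(records, metastore=None):
    """Update (or create) a field -> FieldStats mapping from an iterable of flat records."""
    metastore = metastore if metastore is not None else defaultdict(FieldStats)
    for record in records:
        for field, value in record.items():
            metastore[field].observe(value)
    return metastore
```

The sketch's memory use per field is fixed (4,096 small registers at `p=12`) no matter how many values stream through, which is why approximate counts suit a metastore fed by continuous streams.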

Understanding which other fields in your data correlate with your primary IDs or keys is a vital part of privacy introspection. Remember, even if a unique ID is removed, there could very well be another field or combination of fields that provides a similar level of attribution (see the Netflix Prize Challenge). One technique we use to find other potentially identifiable fields is to compare field names using word embeddings and infer relationships between them.

By creating Word2Vec and FastText models trained on the schemas of thousands of open-domain databases and tables, we can quickly compare schema field names and get clues about other fields worth paying attention to. This is great because it works without ever having to analyze the field contents! Comparing “bike_id” against all of the other fields in the records surfaces two closely related field names: “is_ebike” and “jump_vehicle_name.”
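The comparison step might look something like the sketch below: split each field name on underscores, average the word vectors of its tokens, and rank the other field names by cosine similarity. The hand-made 3-dimensional `TOY_VECTORS` are purely illustrative stand-ins for vectors from a trained Word2Vec or FastText model, and the token-averaging scheme is an assumption, not a description of Gretel's models.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def field_vector(field: str, vectors: Dict[str, Vector]) -> Optional[List[float]]:
    """Average the vectors of a field name's tokens (split on '_'), skipping unknown tokens."""
    known = [vectors[t] for t in field.lower().split("_") if t in vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def related_fields(target: str, fields: Sequence[str],
                   vectors: Dict[str, Vector]) -> List[Tuple[str, float]]:
    """Rank the other field names by cosine similarity to the target field name."""
    target_vec = field_vector(target, vectors)
    if target_vec is None:
        return []
    scored = []
    for field in fields:
        if field == target:
            continue
        vec = field_vector(field, vectors)
        if vec is not None:
            scored.append((field, cosine(target_vec, vec)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors, hand-made for illustration only (NOT from a trained model).
TOY_VECTORS = {
    "bike": [1.0, 0.0, 0.0],
    "ebike": [0.9, 0.1, 0.0],
    "vehicle": [0.8, 0.2, 0.0],
    "jump": [0.5, 0.0, 0.5],
    "id": [0.0, 1.0, 0.0],
    "name": [0.1, 0.9, 0.0],
    "lat": [0.0, 0.0, 1.0],
    "lon": [0.0, 0.0, 1.0],
    "reserved": [0.0, 0.1, 1.0],
}

fields = ["bike_id", "lat", "lon", "is_reserved", "is_ebike", "jump_vehicle_name"]
ranked = related_fields("bike_id", fields, TOY_VECTORS)
```

With these toy vectors, `jump_vehicle_name` and `is_ebike` rank first. Any mapping that supports `in` and indexing, such as gensim's `KeyedVectors`, could be passed in place of the toy dictionary.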

Looking at “is_ebike” shows that it is most definitely a boolean value, and one that is almost always set to the same value, so it is effectively a constant. It’s also not very common, with only 11k entries, so it is probably reported only infrequently.
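Spotting a near-constant field like this takes little more than the share of its most common value. A minimal sketch; the function names are hypothetical and the 0.99 cutoff is an arbitrary, illustrative choice.

```python
from collections import Counter
from typing import Iterable, Tuple


def dominant_value_share(values: Iterable) -> Tuple[object, float]:
    """Return the most common value and the fraction of observations it accounts for."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return None, 0.0
    value, freq = counts.most_common(1)[0]
    return value, freq / total


def is_quasi_constant(values: Iterable, threshold: float = 0.99) -> bool:
    # threshold is an illustrative cutoff, not a value from the original analysis
    return dominant_value_share(values)[1] >= threshold
```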

Moving on to the next field, the metastore tells a slightly different story.

We see fewer records with this field overall, which is not surprising, since this is only one of several operators sending location data. What is more surprising is how low the approximate unique count is relative to the total number of times the field appears.

At this point in time, the percentage of unique values (p_unique) is approximately 6.6% for “bike_id” versus 0.4% for “jump_vehicle_name”. As we discuss below, the p_unique value for “bike_id” will generally level off, while the value for “jump_vehicle_name” will keep approaching 0 as time moves on.

Deep Dive

We decided to take a closer look by pulling some of the raw data to see what was going on with these IDs. For the “bike_id” field, we found that its cardinality generally increased steadily over time. It turns out that some data producers were generating ephemeral “bike_id” values on a regular interval, which explains the steady growth.

When we isolated the “jump_vehicle_name” field, however, its cardinality over time was essentially flat. To us, this indicated that the field probably maps one-to-one to an actual vehicle.
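A toy simulation shows why the two p_unique curves diverge. It assumes a fixed fleet that is reported in every snapshot. With `rotate_every` set, each vehicle's ID is regenerated every that-many snapshots, mimicking the ephemeral “bike_id” values; without it, the ID never changes, like “jump_vehicle_name”. Exact sets stand in for an approximate counter, and the fleet size and rotation interval are made up.

```python
from typing import List, Optional


def p_unique_over_time(num_vehicles: int, num_snapshots: int,
                       rotate_every: Optional[int] = None) -> List[float]:
    """Return p_unique (distinct values / total observations) after each snapshot."""
    seen = set()
    total = 0
    history = []
    for t in range(num_snapshots):
        for v in range(num_vehicles):
            if rotate_every is None:
                value = f"vehicle-{v}"                           # stable ID
            else:
                value = f"vehicle-{v}-epoch-{t // rotate_every}"  # ID regenerated per epoch
            seen.add(value)
            total += 1
        history.append(len(seen) / total)
    return history


fixed = p_unique_over_time(10, 100)
rotating = p_unique_over_time(10, 100, rotate_every=5)
```

With rotation, p_unique settles toward `1 / rotate_every` (0.2 here); with stable IDs it equals the fleet size divided by total observations (0.01 after 100 snapshots), which keeps shrinking toward 0 as snapshots accumulate.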

This field was also only six characters long, which is friendlier for a small screen, while the “bike_id” field usually appeared to be some type of UUID.
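The format difference is easy to check mechanically. This sketch buckets values as UUID-like, six-character alphanumeric codes, or other. The six-alphanumeric pattern is an assumption based only on the length noted above, and the function names and sample values are hypothetical.

```python
import re
import uuid
from collections import Counter
from typing import Iterable

# Assumed shape of a short vehicle name: exactly six letters or digits.
SHORT_CODE = re.compile(r"[A-Za-z0-9]{6}")


def id_shape(value: str) -> str:
    """Roughly classify an identifier string by its format."""
    try:
        uuid.UUID(value)  # raises ValueError for anything that is not a valid UUID string
        return "uuid"
    except ValueError:
        pass
    if SHORT_CODE.fullmatch(value):
        return "short_code"
    return "other"


def shape_profile(values: Iterable[str]) -> Counter:
    """Count how many values fall into each format bucket."""
    return Counter(id_shape(v) for v in values)
```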

We then checked the Uber app and found that the “jump_vehicle_name” values were the actual scooter names shown in the application.

Side Note: If you’re ever in Baltimore, definitely check out One-Eyed Mike’s (in the map above). It’s an awesome row home converted into a restaurant and bar, with great cocktails and awesome food.

By generating snapshots of the GBFS feed, it becomes fairly straightforward to create a “ride record” that captures a change in a scooter’s GPS location relative to its previous value. Plugging this vehicle name into that dataset reveals individual rides. By computing the geodesic distance between coordinates, we can also filter out small changes in location caused by GPS drift, keeping only moves above a certain distance.
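A minimal sketch of the ride-record idea, assuming time-ordered flat records that carry the vehicle name, `lat`, `lon`, and the snapshot's `last_updated`. It uses the haversine formula, a spherical approximation of geodesic distance that is usually close enough at city scale, and treats moves under a hypothetical 100 m threshold as GPS drift. The function names and record layout are illustrative, not the original pipeline.

```python
import math
from typing import Dict, Iterable, List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; haversine treats Earth as a sphere


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters (spherical approximation of geodesic distance)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def ride_records(snapshots: Iterable[dict], key: str = "jump_vehicle_name",
                 min_move_m: float = 100.0) -> List[dict]:
    """Emit a record whenever a vehicle reappears at least min_move_m from its anchor position.

    min_move_m is a hypothetical drift threshold; smaller moves are ignored.
    """
    anchors: Dict[str, Tuple[float, float, int]] = {}  # vehicle -> (lat, lon, last ts seen there)
    rides = []
    for rec in snapshots:
        vid = rec.get(key)
        if vid is None:
            continue
        lat, lon, ts = float(rec["lat"]), float(rec["lon"]), rec["last_updated"]
        prev = anchors.get(vid)
        if prev is None:
            anchors[vid] = (lat, lon, ts)
            continue
        dist = haversine_m(prev[0], prev[1], lat, lon)
        if dist >= min_move_m:
            rides.append({"vehicle": vid, "start_ts": prev[2], "end_ts": ts,
                          "start": (prev[0], prev[1]), "end": (lat, lon),
                          "meters": round(dist, 1)})
            anchors[vid] = (lat, lon, ts)
        else:
            # Treat as GPS drift: keep the anchor position, refresh the last-seen time.
            anchors[vid] = (prev[0], prev[1], ts)
    return rides
```

Keeping the anchor fixed during drift means a slow series of tiny GPS jitters never adds up to a false ride, while `start_ts` still reflects the last time the vehicle was seen at its origin.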

The privacy implications here are real. Observing bikes through the app (or reading the vehicle name off the bike itself) and combining that with aggregated feed data could allow someone to reconstruct the rides of specific individuals as they rent scooters.

Disclosure

When we decided to recommend removing this data, we first looked at HackerOne but ran into a roadblock. None of us are very active HackerOne users, so by default we don’t have any “signal” (aka street cred) on that system to actually submit anything. Then we decided to do what any other developer would do: check out GitHub issues! It turns out there had already been some discussion around “bike_id” randomization, and a small subset of vendor contributors was active on the issue trackers.

This felt like the right place because, fundamentally, we wanted visibility and wanted to reach the right audience. We posted the information as a new issue, and within minutes we had a response; within hours the data was removed from the feeds. Clearly, someone with decision-making authority was already monitoring this GitHub repository, and we were able to give them the right insight to make a change happen the same day.