How to Improve RAG Model Performance with Synthetic Data

Living in today's AI-driven world, you can't escape the buzz surrounding one of the most efficient methods for augmenting language models with new knowledge sources: Retrieval-Augmented Generation (RAG). Optimizing these systems with trusted data is key to deploying and scaling them successfully in production. In this blog, we highlight effective strategies for leveraging high-quality synthetic data to improve RAG model performance, with potential cost, resource, and time savings over traditional data acquisition methods.

Ready to join 125k+ developers in the synthetic data revolution? Get started for free using Gretel’s synthetic data platform today.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation (RAG) combines the capabilities of large language models (LLMs) with contextual information retrieved from external data sources to generate more accurate and enriched responses. It provides a cost-efficient and flexible way to introduce new knowledge sources to LLMs and improve their domain-specific knowledge, producing more trustworthy and reliable responses.
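To make the retrieve-then-generate flow concrete, here is a minimal, dependency-free Python sketch. It assumes a bag-of-words cosine similarity as a stand-in for the dense embeddings and vector database a production system would typically use, and it stops at building the augmented prompt rather than calling any specific LLM API. The names `retrieve`, `build_prompt`, and the stopword list are illustrative, not part of any library.

```python
import math
import re
from collections import Counter

# Illustrative stopword list (an assumption, not taken from any particular library).
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "for", "what", "on", "in", "our", "how", "do", "i"}

def tokenize(text: str) -> list[str]:
    """Lowercase, split on non-alphanumeric characters, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: prepend retrieved context; the result would then be sent to an LLM."""
    ctx = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{ctx}\n\nQuestion: {query}"

docs = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Shipping to Europe takes 5 to 7 business days.",
]
question = "What is the refund policy for returns?"
print(build_prompt(question, retrieve(question, docs, k=1)))
```

Keeping retrieval and prompt construction as separate functions mirrors the modularity of RAG: either piece can be swapped (for example, for an embedding-based retriever) without touching the other.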

What advantages do RAG models offer over traditional LLMs?

LLMs are a wildly popular form of generative AI for producing novel text. They have been applied across industries to a variety of use cases, including semantically relevant information retrieval, code generation, sentiment analysis, text summarization, and content classification. Despite these benefits, organizations face a few common challenges when using LLMs, including:

- The risk of hallucinations, in which the model presents inaccurate or false information.

- Generating outdated information that only reflects insights from stale training data.

- Relying on non-trusted resources to produce seemingly credible answers.

- Expensive infrastructure and engineering costs associated with LLM fine-tuning.

RAG is often more scalable and cost-efficient than fine-tuning an LLM for a domain-specific task. Because RAG models source contextually relevant answers from a trusted knowledge source rather than relying solely on what the LLM absorbed from its broad, web-scale training data, they tend to increase trustworthiness and reduce errors. The benefits of RAG models include:

- Contextual and Domain Relevance: Integrating external data improves the contextual relevance of responses. RAG models excel at specialized queries by accessing relevant external knowledge, overcoming the limits of an LLM's training data.

- Reduced Hallucinations: Dynamically updating the information that RAG models retrieve mitigates the risk of generating incorrect content.

- Cost-efficient Scalability and Customization: RAG models offer scalable knowledge integration and customizable response generation without the need to retrain the core LLM.

- Versatile and Flexible Q&A Approach: RAG models support modular Q&A generation using different, specialized models, enhancing the accuracy and effectiveness of responses.

How can synthetic data augment RAG model performance?

Building RAG models goes beyond traditional Large Language Model Operations (LLMOps) by incorporating steps that ensure high-quality responses from the newly integrated knowledge sources. The journey runs from gathering and indexing data through model fine-tuning to rigorous testing. Along the way, specific optimization steps for retrieval and response evaluation help ensure the model's adaptability and reliability across diverse applications.

Data collection

- The challenge is not just collecting a vast amount of data but also curating the right mix of quality, variety, and relevance. For RAG models, this is crucial because the data acts as a trusted reservoir from which the model retrieves context to respond to new queries. By leveraging synthetic data, developers can kick-start RAG model development, especially in scenarios where real data is scarce or sensitive. This approach not only enriches the knowledge base with domain-specific content but can also build diversity and ethical considerations in from the start, supporting balanced and contextually aware responses.

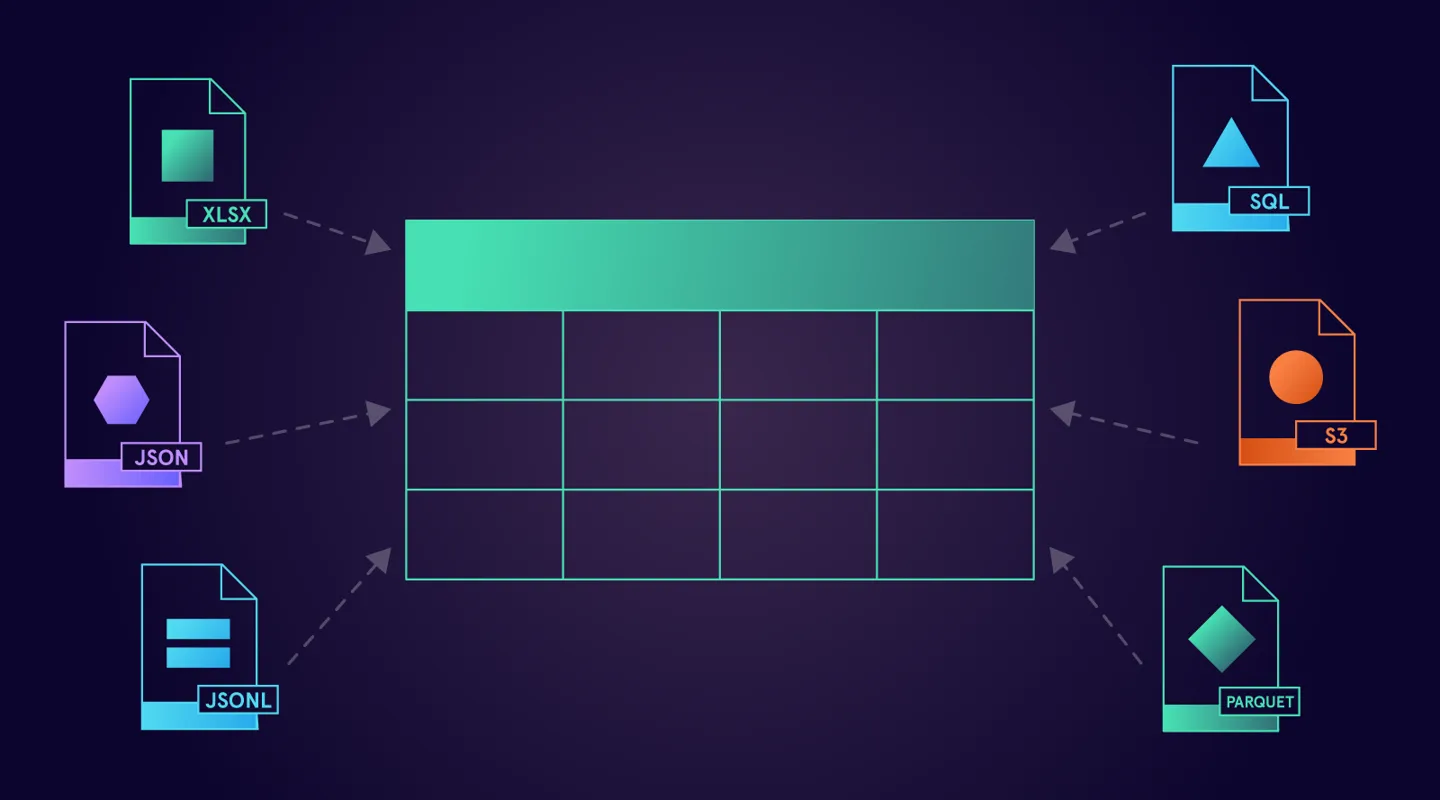
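As a rough illustration of seeding a knowledge base when real data is scarce, the sketch below generates synthetic troubleshooting records from simple rule-based templates. This is a deliberately basic stand-in for the model-based generators a synthetic data platform would provide; the product names, issues, fixes, and the `synthesize_docs` helper are all hypothetical.

```python
import random

# Hypothetical vocabulary for a customer-support knowledge base.
PRODUCTS = ["router", "modem", "smart plug"]
ISSUES = ["won't power on", "drops the connection", "fails to update firmware"]
FIXES = [
    "Hold the reset button for 10 seconds and restart the device.",
    "Check that the device is running the latest firmware.",
    "Move the device closer to the access point and retry.",
]

def synthesize_docs(n: int, seed: int = 0) -> list[dict]:
    """Generate up to n unique synthetic troubleshooting records from templates."""
    rng = random.Random(seed)  # a fixed seed keeps the generated dataset reproducible
    seen, docs = set(), []
    max_unique = len(PRODUCTS) * len(ISSUES)  # cap n at the number of distinct combinations
    while len(docs) < min(n, max_unique):
        product, issue = rng.choice(PRODUCTS), rng.choice(ISSUES)
        if (product, issue) in seen:
            continue  # skip duplicates so the knowledge base stays diverse
        seen.add((product, issue))
        docs.append({
            "title": f"My {product} {issue}",
            "body": rng.choice(FIXES),
            "synthetic": True,  # tag records so they can be filtered or audited later
        })
    return docs

for record in synthesize_docs(3):
    print(record["title"], "->", record["body"])
```

Tagging every record as synthetic is a small design choice that pays off later: it lets you compare retrieval quality with and without synthetic records, or exclude them when auditing answers.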

Expanding Knowledge Sources and Indexing

- Expanding the knowledge base and refining indexing strategies are essential pre-processing steps that further enhance the model's ability to retrieve the right context. Synthetic data plays a pivotal role here by filling informational gaps and helping refine indexing strategies.
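A minimal sketch of this pre-processing: split documents (real or synthetic) into overlapping word windows, then build an inverted index that maps each token to the chunks containing it. It assumes whitespace-delimited words and keyword lookup; production pipelines often chunk by tokens or sentences and index embeddings instead. The `size` and `overlap` defaults are arbitrary illustrative values, and both helper names are hypothetical.

```python
import re
from collections import defaultdict

def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

def build_inverted_index(chunks: list[str]) -> dict[str, set[int]]:
    """Map each lowercase alphanumeric token to the ids of the chunks that contain it."""
    index = defaultdict(set)
    for i, chunk in enumerate(chunks):
        for token in re.findall(r"[a-z0-9]+", chunk.lower()):
            index[token].add(i)
    return dict(index)

doc = ("Hold the reset button for 10 seconds. Then restart the router "
       "and wait two minutes before reconnecting your devices.")
chunks = chunk_words(doc, size=8, overlap=2)
index = build_inverted_index(chunks)
print(chunks)
print(sorted(index["router"]))
```

The overlap keeps sentences that straddle a chunk boundary retrievable from at least one chunk, at the cost of some duplicated text in the index.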

Retrieval

- The retrieval process is a critical component of RAG: its goal is to provide the right contextual information to enrich response generation. Synthetic queries can be instrumental in testing and boosting the efficacy of semantic search algorithms, covering a wide array of intents and complexities.
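Synthetic queries become a labeled test set when each one is generated from, and therefore paired with, a known source document. The sketch below measures hit rate@k and mean reciprocal rank (MRR) over such pairs. The keyword-overlap `rank_docs` function is a placeholder assumption for whatever semantic search the pipeline actually uses, and the documents and queries are hypothetical.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def rank_docs(query: str, docs: dict[str, str]) -> list[str]:
    """Rank document ids by how many query tokens they share (a stand-in for semantic search)."""
    q = tokens(query)
    return sorted(docs, key=lambda doc_id: len(q & tokens(docs[doc_id])), reverse=True)

def evaluate_retrieval(pairs: list[tuple[str, str]], docs: dict[str, str], k: int = 3) -> dict[str, float]:
    """Compute hit rate@k and mean reciprocal rank over (query, expected_doc_id) pairs."""
    hits, reciprocal_ranks = 0, 0.0
    for query, expected in pairs:
        rank = rank_docs(query, docs).index(expected) + 1  # 1-based position of the correct doc
        hits += rank <= k
        reciprocal_ranks += 1.0 / rank
    return {"hit_rate@k": hits / len(pairs), "mrr": reciprocal_ranks / len(pairs)}

docs = {
    "refunds": "Refunds are issued within 30 days of purchase.",
    "shipping": "Orders ship to Europe in 5 to 7 business days.",
    "warranty": "The warranty covers hardware defects for two years.",
}
# Each synthetic query is paired with the document it was written from.
synthetic_queries = [
    ("When are refunds issued?", "refunds"),
    ("How long does shipping to Europe take?", "shipping"),
    ("Does the warranty cover hardware defects?", "warranty"),
]
print(evaluate_retrieval(synthetic_queries, docs, k=1))
```

Generating queries at several difficulty levels (paraphrases, vague intents, multi-part questions) and tracking these two metrics per level shows where the retriever starts to break down.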

Fine-tuning Large Language Models

- LLM fine-tuning adapts the RAG model to specific domains and tasks while further optimizing its performance. Techniques such as differential privacy and prompt augmentation with synthetic data help keep the tuning both privacy-conscious and adapted to specialized scenarios.
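One way to apply prompt augmentation with synthetic data is to pair each synthetic question and answer with the context it should be grounded in, then serialize the examples as JSON Lines for fine-tuning. The chat-style `messages` layout below is an assumption modeled on common chat fine-tuning formats, so check the schema your fine-tuning framework expects. The sketch covers only prompt augmentation; differential privacy would be applied separately, during data generation or training.

```python
import json

SYSTEM_PROMPT = "Answer using only the provided context."  # illustrative instruction text

def to_finetune_record(question: str, context: str, answer: str) -> dict:
    """Wrap one synthetic Q&A pair and its supporting context as a chat-style training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)

# Hypothetical synthetic (question, context, answer) triples.
synthetic_pairs = [
    ("How long do refunds take?", "Refunds are issued within 30 days of purchase.", "Within 30 days of purchase."),
    ("Where do orders ship?", "Orders ship to Europe in 5 to 7 business days.", "To Europe, in 5 to 7 business days."),
]
dataset = to_jsonl([to_finetune_record(q, c, a) for q, c, a in synthetic_pairs])
print(dataset)
```

Putting the retrieved context inside the training prompt teaches the model the same input shape it will see at inference time in the RAG pipeline.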

RAG Responses

- Integrating synthetic data into RAG responses can transform them from simple answers into rich, contextually nuanced narratives. Additional context can be provided by adding synthetic tabular data, for example to illustrate example scenarios or to support enhanced visuals and analytics.
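A minimal sketch of adding synthetic tabular data to a RAG response: render a few synthetic records as a Markdown table and include them in the prompt alongside the retrieved context, so the LLM can draw example scenarios from them. The plan names, prices, prompt wording, and helper names are hypothetical, and Markdown is just one reasonable serialization choice.

```python
def rows_to_markdown(rows: list[dict]) -> str:
    """Render a list of dicts that share the same keys as a Markdown table."""
    if not rows:
        return ""
    headers = list(rows[0])
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(row[h]) for h in headers) + " |")
    return "\n".join(lines)

def build_prompt_with_examples(question: str, context: str, example_rows: list[dict]) -> str:
    """Add synthetic example records to the prompt so the LLM can illustrate its answer."""
    return (
        f"Context:\n{context}\n\n"
        f"Example scenarios (synthetic data):\n{rows_to_markdown(example_rows)}\n\n"
        f"Question: {question}"
    )

synthetic_rows = [
    {"plan": "Basic", "monthly_cost_usd": 10, "support": "email"},
    {"plan": "Pro", "monthly_cost_usd": 25, "support": "24/7 chat"},
]
print(build_prompt_with_examples(
    "Which plan suits a small team?",
    "Plans differ by price and support level.",
    synthetic_rows,
))
```

Labeling the table as synthetic inside the prompt helps the model present those rows as illustrations rather than as facts from the knowledge base.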

Evaluation and Testing

- Generalizability is crucial if RAG models are to offer precise and valuable answers reliably. Testing them across various prompt styles and responses helps ensure they handle diverse inputs consistently. Synthetic data makes it possible to probe the model's limitations and evaluate its performance on edge cases, hallucination detection, and adversarial challenges, strengthening its robustness across these scenarios.
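As a crude illustration of hallucination checks driven by synthetic test cases, the sketch below scores how much of an answer's vocabulary appears in the retrieved context and flags weakly supported answers. This lexical-overlap heuristic, its stopword list, and the 0.6 threshold are assumptions for illustration; production evaluations typically rely on entailment models or LLM-based judges. Seeding the test set with deliberately wrong synthetic answers, such as one with a perturbed number, lets you confirm the check actually catches them.

```python
import re

# Small illustrative stopword list; tune it for your domain.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "in", "and", "or", "for", "on", "it", "within"}

def content_tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, minus stopwords."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}

def groundedness(answer: str, context: str) -> float:
    """Fraction of the answer's content tokens that also appear in the retrieved context."""
    answer_tokens = content_tokens(answer)
    if not answer_tokens:
        return 1.0  # nothing to verify
    return len(answer_tokens & content_tokens(context)) / len(answer_tokens)

def flag_unsupported(cases: list[dict], threshold: float = 0.6) -> list[dict]:
    """Return test cases whose answers look weakly supported by their context."""
    return [c for c in cases if groundedness(c["answer"], c["context"]) < threshold]

context = "Refunds are issued within 30 days of purchase."
cases = [
    {"id": "faithful", "context": context, "answer": "Refunds are issued within 30 days."},
    # Synthetic adversarial case: same wording, perturbed number and invented details.
    {"id": "perturbed", "context": context, "answer": "Refunds are issued within 90 days by wire transfer."},
]
print([c["id"] for c in flag_unsupported(cases)])
```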

Ready to scale your RAG model with synthetic data?

As outlined above, synthetic data can provide significant value to developers building RAG systems across the entire LLMOps lifecycle, potentially saving costs compared with traditional data acquisition methods while delivering realizable gains in model performance.

We invite you to join over 125k developers generating synthetic data with Gretel today. Remember, it’s free to sign up for a Gretel account. Don’t forget to share your Tabular LLM learnings with the rest of the AI community or reach out to the Gretel team for support in our community Discord. Happy synthesizing!