Synthetic Image Models for Smart Agriculture

Since our post on using diffusion models for document synthesis, synthetic image models have drastically improved, especially in the domain of text-to-image generation. After all of the excitement, particularly around creative use cases, you might wonder, “what can I do with these advancements?”

Generative image models can help solve a range of concrete enterprise problems. In this post, we'll walk through one: using synthetic images to improve a computer vision application while reducing costs and speeding up iteration. Specifically, we'll train a computer vision classifier for autonomous farming, then show that its accuracy drops when the weather changes, for example after a snowfall. Finally, we'll use a synthetic image generation model to quickly generate images of snow-covered plants, add them to the training dataset, and recover classification accuracy despite the data drift.

Improvements can come in many forms. For context, a general computer vision workflow will include data collection, curation, and cleaning before even approaching model training and validation. As we’ve discussed in our post describing the machine learning life cycle, synthetic data can reduce the cost of these activities and improve privacy without sacrificing quality. In this post, we’ll focus on how synthetic images can improve the computer vision classification system’s ability to handle unexpected events and improve accuracy in varied conditions.

Autonomous farming with computer vision

Imagine you are an engineer for a company that produces automated farming equipment for agribusiness. The company is replacing or augmenting tractors, feeders, balers, and even weeders with autonomous technologies. As part of this effort, you are designing a detector to distinguish crops from weeds. Farm equipment will use this detector to eliminate weeds while saving precious crops.

To make this more concrete, we’ll look at charlock (i.e., wild mustard) as the weed and maize (i.e., corn) as the crop. These plants have overlapping hardiness zones, and unwanted charlock growth can interfere with the corn harvest.

For this example, we’ll outline and create a computer vision system robust to unexpected environmental variations. Specifically, we’ll use a synthetic image model to generate snow-covered plants that can be used as training data to improve the generalization and performance of our automated agricultural equipment.

Baseline ML model architecture and results

We’ll use a neural network as the backbone for our detection algorithm. This means that we’ll need to choose a neural network architecture and train it to classify images coming from autonomous farming equipment.

A popular and performant computer vision architecture is the ResNet, which is easy to train, stable, fast, and efficient. To train a ResNet to distinguish corn from wild mustard, we'll show it hundreds of images of each that we have meticulously collected and labeled by hand. This process is expensive and time-consuming, but it gives our weeding machine a strong starting level of accuracy.
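Much of the ResNet's ease of training comes from its residual (skip) connections, introduced in the original ResNet paper by He et al. Each block learns a residual function $\mathcal{F}$ and adds its input back, so gradients can flow through the identity path even in deep networks. In that paper's notation, a basic block with input $\mathbf{x}$, weights $W_1, W_2$, and ReLU nonlinearity $\sigma$ (biases omitted) computes:

```latex
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x},
\qquad
\mathcal{F}(\mathbf{x}, \{W_i\}) = W_2\,\sigma(W_1 \mathbf{x})
```

A second ReLU is applied to $\mathbf{y}$ after the addition. For a two-class task like ours, a common transfer-learning setup (our assumption; the post does not describe its exact classification head) is to replace ResNet18's final fully connected layer with one that has two outputs, one for corn and one for charlock.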

After our network is trained, we look at the results and see solid performance. Our ResNet18 model (the 18-layer variant of ResNet), trained and evaluated on real images, reaches 96.75% accuracy. Excellent!
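A single accuracy figure hides which mistakes the classifier makes, and for a weeder the two error types have very different costs: predicting "charlock" for a corn plant destroys crop, while the reverse only leaves a weed behind. The following is a minimal sketch of an evaluation that reports both, assuming labels are plain strings `"corn"` and `"charlock"`; the function name `evaluate` is hypothetical and not taken from the post's code.

```python
from collections import Counter


def evaluate(labels, predictions):
    """Return overall accuracy and a confusion count keyed by (true, predicted)."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    if not labels:
        raise ValueError("need at least one example")
    # Count every (true label, predicted label) pair.
    confusion = Counter(zip(labels, predictions))
    # Correct predictions are the pairs where both labels match.
    correct = sum(n for (true, pred), n in confusion.items() if true == pred)
    return correct / len(labels), confusion


labels = ["corn", "corn", "charlock", "charlock"]
preds = ["corn", "charlock", "charlock", "charlock"]
acc, confusion = evaluate(labels, preds)
print(acc)                              # 0.75
print(confusion[("corn", "charlock")])  # 1 corn plant would be pulled up
```

Because `Counter` returns 0 for missing keys, `confusion[("charlock", "corn")]` safely reads as 0 when no weed was mistaken for corn.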

There's still room for improvement: we could collect more data, tune our network, or change the architecture. As a proof of concept, however, it distinguishes corn from charlock quite well.

Handling a changing environment with synthetic data

After this success, we deploy the model into our automatic weeding machines and sit back to enjoy a job well done. However, imagine we have an unexpected snowstorm in the fields, and now all the plants are covered in a thin layer of snow.

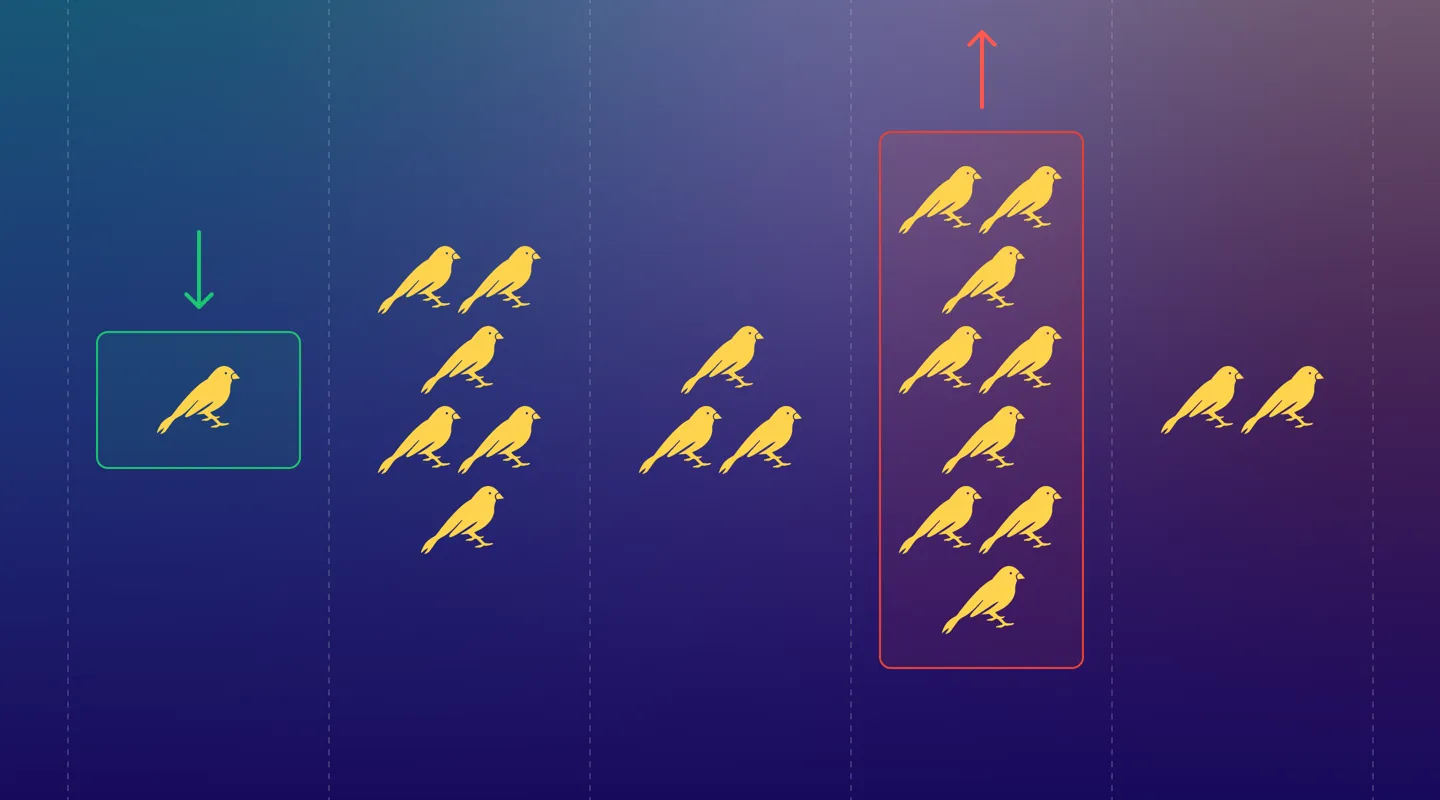

Our classifier's accuracy drops to 82.5% in this unexpected situation. As we discussed in our ML life cycle post, the data drift in our environment has sharply reduced the performance of our data-dependent ML system. If left running, our automated systems could start pulling up corn and leaving behind harmful weeds, which would hurt our crop yield and be financially costly. Given the stakes, we don't have time to collect hundreds of high-quality images, send them to be manually labeled, retrain our classifier, and redeploy. We need to train and deploy a solution now.
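One way to catch a drop like this before it damages a harvest is to periodically score the deployed model on a small, freshly labeled sample from current field conditions and compare the result against the baseline. The post doesn't describe a monitoring setup, so this is a hypothetical sketch: the function names and the 2-point tolerance are our own assumptions, while the two accuracy figures come from this post.

```python
def accuracy_drop(baseline_pct, current_pct):
    """Percentage-point drop from baseline accuracy to current accuracy."""
    return baseline_pct - current_pct


def needs_retraining(baseline_pct, current_pct, max_drop_pct=2.0):
    """Flag the model when accuracy falls by more than max_drop_pct points.

    max_drop_pct is a hypothetical tolerance; choose it based on the
    real cost of misclassifying crops and weeds.
    """
    return accuracy_drop(baseline_pct, current_pct) > max_drop_pct


# Figures from this post: 96.75% on clear-weather data, 82.5% in snow.
print(accuracy_drop(96.75, 82.5))     # 14.25
print(needs_retraining(96.75, 82.5))  # True
```

A fixed threshold is the simplest possible rule; it only works if the spot-check sample is large enough that a drop of a few points is not just noise.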

Synthetic data is a great way to reduce data collection costs while maintaining high utility for downstream machine learning applications. This case is no different! Instead of collecting images, we can synthesize images of corn and charlock with snow on them and retrain our classifier with those synthetic images included in our training data. As other researchers have suggested, this should boost our classifier's performance.
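Mechanically, "including synthetic images in our training data" can be as simple as merging two labeled lists and shuffling. The following is a minimal sketch under our own assumptions: the `Sample` record, the file paths, and `build_training_set` are hypothetical. We keep a `synthetic` flag on each sample so that evaluation can still be restricted to real images, as in the baseline above.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    path: str        # image file location (hypothetical layout)
    label: str       # "corn" or "charlock"
    synthetic: bool  # True if produced by the image model


def build_training_set(real, synthetic, seed=0):
    """Merge real and synthetic samples and shuffle them reproducibly."""
    combined = list(real) + list(synthetic)
    random.Random(seed).shuffle(combined)  # private RNG: no global state touched
    return combined


real = [Sample(f"real/corn_{i}.png", "corn", False) for i in range(3)]
synth = [Sample(f"synthetic/snow_charlock_{i}.png", "charlock", True) for i in range(2)]
train = build_training_set(real, synth)
print(len(train), sum(s.synthetic for s in train))  # 5 2
```

Using a seeded `random.Random` instance keeps the shuffle order identical across runs, which makes it easier to compare a real-only model with a real-plus-synthetic one.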

Overview of Synthetic Image Generation and Text-to-Image Models

An oft-cited Gartner prediction is that by 2024, 60% of the data used to develop AI and analytics projects will be synthetically generated. So far, structured and unstructured synthetic data (e.g., tabular and text) has driven enormous business value, enabling privacy-preserving collaboration and ML/AI applications.

Over the past few years, we've seen vast improvements in other data types like audio, images, and video. In particular, systems such as DALL-E 2, Imagen, eDiffI, Make-A-Scene, and others have dramatically improved one specific type of synthetic data generation. This type of system, commonly referred to as text-to-image, lets a user input a text description, and the generative model outputs an image matching that text.

This paradigm has proven immensely useful for creative applications such as concept art and storyboarding. However, the system's ability to translate a text description into an image is limited by its original training data. For example, if you try to use the open-source Stable Diffusion model to generate charlock or maize for the autonomous farming application, whether in normal conditions or in a snowy environment, you'll find the model ill-suited to the task.

While these models are powerful, they often cannot be used directly off the shelf for enterprise applications. We need to customize these models to work for our use cases. The process of customization is called fine-tuning.
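The post doesn't say which objective Gretel's fine-tuning uses. As a point of reference, diffusion models such as Stable Diffusion are typically trained, and commonly fine-tuned, with the simplified denoising objective. Given a clean sample $x_0$ (for Stable Diffusion, a latent encoding of the image), a text-conditioning embedding $c$, a timestep $t$, and Gaussian noise $\epsilon$, the network $\epsilon_\theta$ learns to predict the noise that was added:

```latex
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1 - \bar\alpha_t}\,\epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

\mathcal{L}(\theta) = \mathbb{E}_{x_0,\,c,\,\epsilon,\,t}
  \left[ \lVert \epsilon - \epsilon_\theta(x_t, t, c) \rVert_2^2 \right]
```

Here $\bar\alpha_t$ is the cumulative product of the noise schedule up to step $t$. In this standard setup, fine-tuning keeps minimizing $\mathcal{L}$ on new images paired with captions (for example, captions naming corn or charlock), so the model learns to associate that text with the new visual concept.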

It takes about 15 minutes for Gretel's synthetic image model to learn a new concept on a single NVIDIA A100 GPU. After we customize the model, we can generate synthetic images of our plants in a variety of conditions. In fact, all of the plant images in this post are synthetic!

For our inclement weather example, we need to generate images of our plants in the snow. Generating 2,000 images of corn and charlock in the snow (such as those in Figure 2) takes about an hour on a single NVIDIA A100 GPU with 40 GB of memory. After we augment our training set with these images, performance returns to acceptable levels: the ResNet18 model trained on real and synthetic images, including the snowy ones, reaches 97.75% accuracy.
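To plan a generation batch like this, it helps to fix the number of images per class, the prompt, and a random seed for each image so the run is reproducible. This is a hypothetical sketch: the prompt template, the even split between corn and charlock, and the function name are our assumptions (the post doesn't publish its prompts or class balance), and the code only builds a job list rather than calling any image model. It also works out the per-image throughput implied by the figures in this section.

```python
PLANTS = ("corn", "charlock")
# Hypothetical prompt template; the post does not publish its prompts.
TEMPLATE = "a photo of a young {plant} plant covered in a thin layer of snow"


def generation_plan(total_images, plants=PLANTS, seed_start=0):
    """Split an image budget evenly across classes, with one fixed seed per image."""
    per_class, remainder = divmod(total_images, len(plants))
    if remainder:
        raise ValueError("total_images must divide evenly across classes")
    labels = [plant for plant in plants for _ in range(per_class)]
    return [
        {"label": label, "prompt": TEMPLATE.format(plant=label), "seed": seed_start + i}
        for i, label in enumerate(labels)
    ]


plan = generation_plan(2_000)
print(len(plan), sum(job["label"] == "corn" for job in plan))  # 2000 1000

# Throughput implied by the post: about 2,000 images in about 1 hour on one A100.
seconds_per_image = 3600 / 2000
print(seconds_per_image)  # 1.8
```

Recording the seed alongside each prompt means any individual synthetic image can be regenerated later, which is useful when auditing images that end up in the training set.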

Conclusion

In this post, we explored how to use a synthetic image generation model to improve the ability of our plant classifier to handle adverse weather conditions, resolving an issue created by data drift. At Gretel, we’re building a synthetic data platform that simplifies generating, anonymizing, and sharing access to data for thousands of engineers and data science teams worldwide. Even if you have no data or limited data, Gretel can help you start building machine learning and AI solutions.