Building a Robust RAG Evaluation Pipeline with Synthetic Data 🚀

As more teams deploy Retrieval-Augmented Generation (RAG) systems to production, a critical challenge emerges: how do you know your system will handle the diverse range of queries it might face in the wild? Will it gracefully handle edge cases? Can it recognize when it shouldn't answer? 🤔

In this hands-on guide, we'll walk through building an end-to-end evaluation pipeline for RAG systems using synthetic data generation. Instead of spending weeks manually crafting test cases, we'll use Gretel's Data Designer to automatically generate diverse, challenging evaluation scenarios. We'll then use these to quantitatively assess RAG performance across different configurations. Creating evaluation datasets is one of the most popular ways we see customers getting started with synthetic data at Gretel - it's a practical, immediate-value use case that can save weeks of manual work while improving test coverage.

🔥 Want to try it yourself? Run the complete notebook in Colab or modify it to evaluate your own RAG system! If you want a video version of this walkthrough, check out the demo below.

The Challenge with RAG Evaluation

When deploying RAG systems, teams often focus heavily on the happy path - queries that perfectly match their test cases. But production is messy. Users ask unexpected questions, seek information that spans multiple documents, or ask about topics outside your knowledge base entirely.

A robust evaluation should test:

- Simple fact lookups vs complex multi-hop reasoning

- Questions with clear answers vs those requiring nuance

- Queries that cannot be answered from the context

- Different types of user intents and complexity levels

Building this test set manually is time-consuming and often misses important edge cases. Let's automate it.

Building the Evaluation Pipeline ⚡

Our pipeline has four main components:

- 📥 Data ingestion and chunking

- 🔌 Vector store and retrieval setup

- 🤖 Synthetic QA pair generation

- 📊 Evaluation and visualization

Let's walk through each step with code you can adapt for your own use case.

1. Data Ingestion and Processing

For this example, we'll use documentation from recently trending AI projects on GitHub. This makes a good test set because the content is unlikely to be part of most LLMs' training data, so we evaluate the RAG system's retrieval and in-context learning capabilities rather than the LLM's general knowledge.

Using LangChain's document loaders and text splitters (though you could use any framework of your choice), we load these documents and split them into chunks with configurable size and overlap. The specific chunking strategy can significantly impact RAG performance, which we'll evaluate later in this post.
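To make the size and overlap trade-off concrete, here is a minimal sketch of a sliding-window splitter. It is not LangChain's splitter: it treats whitespace-delimited words as a rough stand-in for tokens, and the function name `chunk_words` is hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 512, chunk_overlap: int = 50) -> list[str]:
    """Split text into overlapping windows of whitespace-delimited words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


# Ten "words", windows of 4 with 1 word shared between neighbours.
sample = " ".join(str(i) for i in range(10))
for chunk in chunk_words(sample, chunk_size=4, chunk_overlap=1):
    print(chunk)
```

Larger overlap reduces the chance that a fact is cut in half at a chunk boundary, at the cost of more chunks to embed and store.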

2. Setting Up the Vector Store

Next, we'll set up our retrieval system. We'll use FAISS (Facebook AI Similarity Search) as our vector index and store. It's a popular open-source library that efficiently indexes and searches dense vectors; the vectors themselves come from a separate embedding model. While we're using FAISS here, you could easily swap this out for other vector stores like Pinecone, Weaviate, or Milvus depending on your production needs.

Similarly, while we're using LangChain for this example, frameworks like LlamaIndex and NVIDIA’s NeMo Retriever offer comparable functionality. Here's how we set up our retrieval system:

import openai  # the module-level client reads OPENAI_API_KEY from the environment
# On recent LangChain releases these two classes are imported from langchain_community instead.
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.vectorstores import FAISS


def build_vector_store(chunks, embedding_model_name="sentence-transformers/all-MiniLM-L6-v2"):
    # Embed every chunk and index the resulting vectors in an in-memory FAISS store.
    embeddings = HuggingFaceEmbeddings(model_name=embedding_model_name)
    vectorstore = FAISS.from_documents(chunks, embeddings)
    return vectorstore


def answer_with_rag(question: str, vectorstore, num_retrieved: int = 5):
    # Retrieve the chunks most similar to the question and label them for the prompt.
    retrieved_docs = vectorstore.similarity_search(question, k=num_retrieved)
    context = "\n\n".join([f"Doc {i}: {doc.page_content}"
                           for i, doc in enumerate(retrieved_docs)])
    messages = [
        {
            "role": "system",
            "content": "You are an expert answering questions based solely on the provided context. If the answer cannot be found in the context, respond with 'Not answerable'."
        },
        {
            "role": "user",
            "content": f"Context:\n{context}\n\nQuestion: {question}\n\nAnswer:"
        }
    ]
    # A low temperature keeps answers close to the retrieved context.
    response = openai.chat.completions.create(
        model="gpt-4o-mini",
        messages=messages,
        max_tokens=512,
        temperature=0.2,
    )
    # Return the answer together with the retrieved chunks so both can be evaluated.
    return response.choices[0].message.content.strip(), retrieved_docs
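Conceptually, `similarity_search` embeds the question and returns the `k` stored chunks whose vectors are closest to it. The sketch below illustrates that idea with hand-written vectors and cosine similarity in plain Python. It is an illustration only: FAISS uses optimized index structures, the distance metric depends on how the index is configured, and `top_k_by_cosine` is a hypothetical helper.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_by_cosine(query_vec, doc_vecs, k=5):
    """Return (index, score) pairs for the k document vectors most similar to the query."""
    scored = [(i, cosine_similarity(query_vec, vec)) for i, vec in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-dimensional "embeddings"; real embedding models produce hundreds of dimensions.
doc_vecs = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
print(top_k_by_cosine([1.0, 0.1], doc_vecs, k=2))
```

The `num_retrieved` argument in `answer_with_rag` plays the role of `k` here: a larger value gives the LLM more context to draw on but also more irrelevant text to ignore.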

3. Synthetic Data Generation with Gretel

This is where we address one of the biggest challenges in RAG evaluation: creating a diverse, comprehensive test set. Manually writing test cases is not only time-consuming but often misses important edge cases or reflects our own biases about what we think users might ask.

Gretel's Data Designer offers a solution: it uses an agent-based architecture to generate synthetic question-answer pairs that systematically test different aspects of your RAG system. What makes this approach particularly powerful is the ability to control the generation process through carefully designed seed columns, along with a full synthetic pipeline for evaluating the quality of the generated data:

from gretel_client.navigator import DataDesigner
from pydantic import BaseModel, Field


class QAPair(BaseModel):
    # Structured output schema for each generated question-answer pair.
    question: str = Field(...)
    answer: str = Field(...)


class EvalMetrics(BaseModel):
    # 1-5 rating schema; not referenced elsewhere in this excerpt.
    context_relevance: int = Field(..., ge=1, le=5)
    answer_correctness: int = Field(..., ge=1, le=5)


def setup_data_designer(contexts):
    # NOTE: this excerpt does not show how `contexts` feeds the {context}
    # placeholder in the prompt below; see the notebook for that step.
    designer = DataDesigner(
        api_key=GRETEL_API_KEY,  # assumed to be defined earlier in the notebook
        model_suite="llama-3.x",
        special_system_instructions="Generate diverse and challenging evaluation questions for a RAG system."
    )

    # Add seed columns to control generation
    designer.add_categorical_seed_column(
        name="query_complexity",
        values=[
            "Find a single specific fact",
            "Analyze or synthesize multiple pieces of information",
            "Handle missing or ambiguous information"
        ]
    )
    designer.add_categorical_seed_column(
        name="query_intent",
        values=[
            "Find specific information",
            "Understand a broader topic",
            "Verify or check information",
            "Solve a specific problem"
        ]
    )
    designer.add_categorical_seed_column(
        name="answerability",
        values=[
            "Information is directly available in context",
            "Information requires combining multiple context pieces",
            "Information is not available in context"
        ]
    )

    # Generate QA pairs and evaluation metrics (only the QA-pair column is shown here)
    designer.add_generated_data_column(
        name="qa_pair",
        llm_type="judge",
        generation_prompt=(
            "Context:\n{context}\n\n"
            "Generate a question where the user needs to {query_complexity}. "
            "They are trying to {query_intent}. "
            "For this question, the {answerability}."
        ),
        data_config={"type": "structured", "params": {"model": QAPair}}
    )
    return designer


# Generate evaluation dataset (contexts_for_gretel is assumed to be built earlier in the notebook)
designer = setup_data_designer(contexts_for_gretel)
batch_job = designer.submit_batch_workflow(num_records=200)
eval_dataset = batch_job.fetch_dataset(wait_for_completion=True)
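The three seed columns define a grid of scenarios. The sketch below enumerates that grid and fills the prompt template for one cell, which is a cheap way to check that the wording reads naturally before submitting a batch job. How Data Designer samples seed values for each record is not covered in this post, so the enumeration is only an illustration; `SEEDS` and `PROMPT_TEMPLATE` are local copies of the values shown above, and `seed_combinations` is a hypothetical helper.

```python
from itertools import product

SEEDS = {
    "query_complexity": [
        "Find a single specific fact",
        "Analyze or synthesize multiple pieces of information",
        "Handle missing or ambiguous information",
    ],
    "query_intent": [
        "Find specific information",
        "Understand a broader topic",
        "Verify or check information",
        "Solve a specific problem",
    ],
    "answerability": [
        "Information is directly available in context",
        "Information requires combining multiple context pieces",
        "Information is not available in context",
    ],
}

PROMPT_TEMPLATE = (
    "Context:\n{context}\n\n"
    "Generate a question where the user needs to {query_complexity}. "
    "They are trying to {query_intent}. "
    "For this question, the {answerability}."
)


def seed_combinations(seeds):
    """Return one dict per cell of the cross product of all seed values."""
    names = list(seeds)
    return [dict(zip(names, values)) for values in product(*seeds.values())]


combos = seed_combinations(SEEDS)
print(len(combos))  # 3 * 4 * 3 = 36
print(PROMPT_TEMPLATE.format(context="<chunk text>", **combos[0]))
```

Printing a filled prompt makes small phrasing issues visible, such as a capitalized seed value landing mid-sentence, and shows which combinations pull in opposite directions (for example, a single-fact lookup paired with information that is not in the context).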

4. Evaluation and Visualization

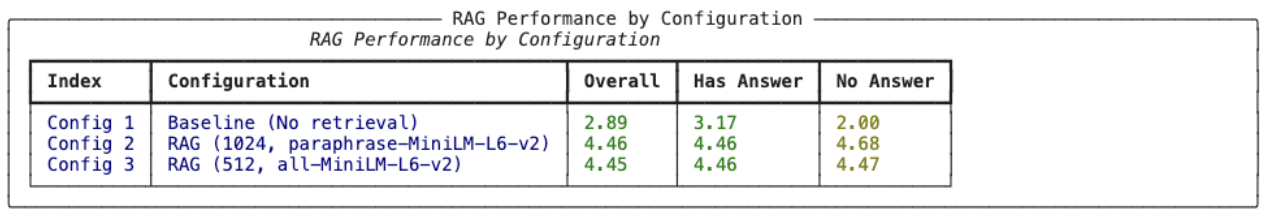

We evaluate our system by comparing three configurations:

- Baseline (No retrieval): The LLM answers using only its internal knowledge.

- RAG (512 tokens): Documents are split into 512-token chunks (with a 50-token overlap) and embedded with the all-MiniLM-L6-v2 model.

- RAG (1024 tokens): Documents are split into 1024-token chunks (with a 100-token overlap) and embedded with the paraphrase-MiniLM-L6-v2 model.

Using synthetic QA pairs generated via Gretel, we test each configuration on a range of query types—from straightforward fact lookups to more ambiguous, complex questions. Each response is scored for answer correctness and context relevance. Finally, we aggregate and visualize these metrics (e.g., with bubble charts) to quickly identify performance gaps and trade-offs.

import json

import openai
import pandas as pd

configurations = [
    {
        "name": "RAG (512, all-MiniLM-L6-v2)",
        "chunk_size": 512,
        "chunk_overlap": 50,
        "embedding_model": "sentence-transformers/all-MiniLM-L6-v2"
    },
    {
        "name": "RAG (1024, paraphrase-MiniLM-L6-v2)",
        "chunk_size": 1024,
        "chunk_overlap": 100,
        "embedding_model": "sentence-transformers/paraphrase-MiniLM-L6-v2"
    },
    {
        "name": "Baseline (No retrieval)",
        "baseline": True
    }
]


def evaluate_rag_answer(true_answer, generated_answer):
    # Ask an LLM judge to compare the generated answer with the ground truth.
    messages = [
        {
            "role": "system",
            "content": "Evaluate the generated answer against the ground truth..."
        },
        {
            "role": "user",
            "content": f"Ground Truth: {true_answer}\nGenerated Answer: {generated_answer}"
        }
    ]
    response = openai.chat.completions.create(
        model="gpt-4o-mini",
        messages=messages,
        temperature=0.1,
        response_format={"type": "json_object"}
    )
    # The judge replies with a JSON object, so parse it into a dict.
    return json.loads(response.choices[0].message.content)


# Run evaluations and collect results
# (run_evaluation_on_config and visualize_rag_performance are defined in the notebook)
results = []
for config in configurations:
    avg_score, details = run_evaluation_on_config(config, eval_dataset)
    results.extend(details)

# Visualize results
results_df = pd.DataFrame(results)
visualize_rag_performance(results_df)
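`run_evaluation_on_config` is defined in the notebook and not shown here. As a rough idea of what such a loop involves, the sketch below scores every record for one configuration and returns the mean score plus per-record details. Everything in it is an assumption made for illustration: the record keys (`question`, `answer`), the `score` field in the judge output, and the injected `answer_fn` and `judge_fn` callables, which stand in for calls like `answer_with_rag` and `evaluate_rag_answer`.

```python
from statistics import mean
from typing import Callable


def run_evaluation_sketch(
    config_name: str,
    records: list[dict],
    answer_fn: Callable[[str], str],
    judge_fn: Callable[[str, str], dict],
) -> tuple[float, list[dict]]:
    """Answer and score every record; return (mean score, per-record details)."""
    details = []
    for record in records:
        generated = answer_fn(record["question"])
        verdict = judge_fn(record["answer"], generated)
        details.append({
            "config": config_name,
            "question": record["question"],
            "generated_answer": generated,
            "score": verdict["score"],  # hypothetical field name in the judge output
        })
    # Guard against an empty dataset, where mean() would raise.
    avg_score = mean(d["score"] for d in details) if details else 0.0
    return avg_score, details


# Stub callables so the loop can be exercised without any API calls.
records = [
    {"question": "What is 2 + 2?", "answer": "4"},
    {"question": "Capital of France?", "answer": "Paris"},
]


def stub_answer(question: str) -> str:
    return "4"


def stub_judge(truth: str, generated: str) -> dict:
    return {"score": 5 if truth == generated else 1}


avg, details = run_evaluation_sketch("stub", records, stub_answer, stub_judge)
print(avg, len(details))
```

Passing the answering and judging steps in as callables keeps the loop testable offline and makes it easy to swap the baseline (no retrieval) in for the RAG path.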

Understanding RAG Evaluation Results

Let's look at how our three configurations performed:

[Figure: performance of the three configurations]

The results highlight several important considerations for your own RAG system:

- Chunk Size Impact: Your optimal chunk size may vary based on your document structure and typical query patterns. Our evaluation framework lets you quantify these trade-offs.

- Embedding Model Selection: Different embedding models excel at different types of semantic similarity. By testing multiple models, you can find the best fit for your use case.

- Retrieval Strategy: The number of chunks to retrieve and how to combine them can significantly impact performance. Our framework helps you tune these parameters systematically.

Recall that each synthetic evaluation example carries the seed values used to generate it. We can use those tags to see how each configuration performs on tasks that are meaningful to users. A bubble chart breaks performance down by tasks such as “Finding specific information”, “Understanding broader topics”, and “Handling ambiguous information”, showing where each configuration shines.

[Figure: bubble chart of performance by task type for each configuration]
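A bubble chart like this needs one row per (configuration, tag) pair, with a mean score and a count to drive bubble size. Below is a minimal sketch of that aggregation using only the standard library. The notebook's `visualize_rag_performance` is not shown in this post, and the field names `config`, `query_intent`, and `score` are assumptions about the shape of the result rows.

```python
from collections import defaultdict


def aggregate_by_tag(rows, tag="query_intent"):
    """Group result rows by (config, tag value) and compute mean score and count."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[(row["config"], row[tag])].append(row["score"])
    return {
        key: {"mean_score": sum(scores) / len(scores), "count": len(scores)}
        for key, scores in buckets.items()
    }


# Hand-made rows for illustration; these are not results from the evaluation.
rows = [
    {"config": "RAG (512)", "query_intent": "Find specific information", "score": 5},
    {"config": "RAG (512)", "query_intent": "Find specific information", "score": 3},
    {"config": "Baseline", "query_intent": "Find specific information", "score": 1},
]
summary = aggregate_by_tag(rows)
print(summary[("RAG (512)", "Find specific information")])
```

Keeping the count next to the mean matters when reading the chart: a high mean over a handful of examples deserves less weight than the same mean over dozens.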

Best Practices for RAG Evaluation

Based on our experiments, here are key recommendations:

- Generate Diverse Test Cases: Use synthetic data generation tools like Gretel to create a wide range of evaluation scenarios.

- Test Edge Cases: Explicitly evaluate how your system handles missing or ambiguous information.

- Monitor Multiple Metrics: Track both retrieval accuracy and answer quality.

- Compare Configurations: Test different chunk sizes, embedding models, and retrieval parameters.
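One way to compare configurations systematically is to generate the grid rather than write each entry by hand. The sketch below builds dictionaries in the same shape as the `configurations` list used earlier. `build_config_grid` is a hypothetical helper, `num_retrieved` is an extra key assumed here for the number of chunks to retrieve, and the overlap rule (about 10% of the chunk size) is an arbitrary choice for illustration.

```python
from itertools import product


def build_config_grid(chunk_sizes, embedding_models, num_retrieved_values):
    """Return one config dict per parameter combination, plus a no-retrieval baseline."""
    configs = []
    for size, model, k in product(chunk_sizes, embedding_models, num_retrieved_values):
        short_name = model.split("/")[-1]  # drop the organization prefix for display
        configs.append({
            "name": f"RAG ({size}, {short_name}, k={k})",
            "chunk_size": size,
            "chunk_overlap": size // 10,
            "embedding_model": model,
            "num_retrieved": k,
        })
    configs.append({"name": "Baseline (No retrieval)", "baseline": True})
    return configs


grid = build_config_grid(
    chunk_sizes=[512, 1024],
    embedding_models=[
        "sentence-transformers/all-MiniLM-L6-v2",
        "sentence-transformers/paraphrase-MiniLM-L6-v2",
    ],
    num_retrieved_values=[3, 5],
)
print(len(grid))  # 2 * 2 * 2 retrieval configs + 1 baseline = 9
```

The grid grows multiplicatively and every entry reruns the full evaluation set, so it is worth starting with a small grid and widening only the dimensions that show a difference.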

Conclusion

Production RAG systems need robust evaluation across a spectrum of query types and complexity levels. While manual testing is valuable, synthetic data generation allows you to systematically explore edge cases and potential failure modes at scale.

Remember: the goal isn't just to handle the happy path - it's to build RAG systems that gracefully handle the unexpected queries that inevitably arise in production.

Join our community of synthetic data builders! Share your experiences, ask questions, or get help with your specific use case on the Synthetic Data Discord Community or reach out directly at sales@gretel.ai.